Summary of 4_Xgboost

Extreme Gradient Boosting (Xgboost)

- n_jobs: 6

- objective: multi:softprob

- eta: 0.1

- max_depth: 8

- min_child_weight: 1

- subsample: 1.0

- colsample_bytree: 1.0

- eval_metric: f1

- num_class: 6

- explain_level: 1
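As a rough illustration of how the settings above could map onto a native XGBoost parameter dictionary, here is a minimal sketch. The helper name `to_native_params` is hypothetical. It makes three assumptions: `n_jobs` corresponds to XGBoost's native `nthread`; `f1` is not one of XGBoost's built-in evaluation metrics, so it would have to be supplied separately as a custom metric; and `explain_level` is a reporting setting of the tool that produced this summary rather than an XGBoost parameter.

```python
# Settings copied from the list above.
reported = {
    "n_jobs": 6,
    "objective": "multi:softprob",
    "eta": 0.1,
    "max_depth": 8,
    "min_child_weight": 1,
    "subsample": 1.0,
    "colsample_bytree": 1.0,
    "eval_metric": "f1",
    "num_class": 6,
    "explain_level": 1,
}


def to_native_params(settings):
    """Hypothetical helper: build a dict for XGBoost's native training API."""
    params = dict(settings)
    # Assumption: the scikit-learn style n_jobs maps to the native nthread.
    params["nthread"] = params.pop("n_jobs")
    # Assumption: f1 is not a built-in metric, so it is dropped here and
    # would be passed to training as a custom metric function instead.
    if params.get("eval_metric") == "f1":
        params.pop("eval_metric")
    # Assumption: explain_level belongs to the reporting tool, not XGBoost.
    params.pop("explain_level", None)
    return params


native = to_native_params(reported)
print(native)
```

A dictionary like `native` could then be passed as the first argument to `xgboost.train`, with the F1 score provided through a custom metric callable; that step is left out because the original training code is not part of this summary.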

Validation

- validation_type: kfold

- k_folds: 5

- shuffle: True

- stratify: True

- random_seed: 42
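To make the validation settings concrete, the sketch below shows what a shuffled, stratified 5-fold split does, using only the Python standard library. It is not the code that produced this report: the function `stratified_kfold` is hypothetical, the labels are synthesized from the per-class support counts reported under Metric details, and the exact fold membership will differ from the original run even with the same seed. In practice a library splitter such as scikit-learn's `StratifiedKFold` would normally be used.

```python
import random
from collections import defaultdict


def stratified_kfold(labels, k=5, seed=42):
    """Yield (train_idx, valid_idx) pairs that preserve class proportions."""
    rng = random.Random(seed)              # random_seed: 42
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    folds = [[] for _ in range(k)]         # k_folds: 5
    for label in sorted(by_class):
        members = by_class[label]
        rng.shuffle(members)               # shuffle: True
        # Dealing each class round-robin keeps folds stratified.
        for pos, idx in enumerate(members):
            folds[pos % k].append(idx)

    for i in range(k):
        valid = sorted(folds[i])
        train = sorted(idx for j in range(k) if j != i for idx in folds[j])
        yield train, valid


# Synthetic labels built from the support row of the metrics table.
support = [2462, 1562, 1960, 1944, 1829, 1963]
labels = [cls for cls, n in enumerate(support) for _ in range(n)]

splits = list(stratified_kfold(labels))
for fold, (train, valid) in enumerate(splits):
    print(fold, len(train), len(valid))
```

Each sample lands in exactly one validation fold, so out-of-fold predictions cover all 11720 samples, which is consistent with the support totals in the metrics table.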

Optimized metric

f1

Training time

37.9 seconds

Metric details

| | 0 | 1 | 2 | 3 | 4 | 5 | accuracy | macro avg | weighted avg | logloss |
|---|---|---|---|---|---|---|---|---|---|---|
| precision | 0.9642 | 0.964194 | 0.972862 | 0.983607 | 0.992333 | 0.998953 | 0.978925 | 0.979358 | 0.979078 | 0.0661003 |
| recall | 0.984565 | 0.965429 | 0.969388 | 0.987654 | 0.990705 | 0.972491 | 0.978925 | 0.978372 | 0.978925 | 0.0661003 |
| f1-score | 0.974277 | 0.964811 | 0.971122 | 0.985626 | 0.991518 | 0.985545 | 0.978925 | 0.978817 | 0.978948 | 0.0661003 |
| support | 2462 | 1562 | 1960 | 1944 | 1829 | 1963 | 0.978925 | 11720 | 11720 | 0.0661003 |

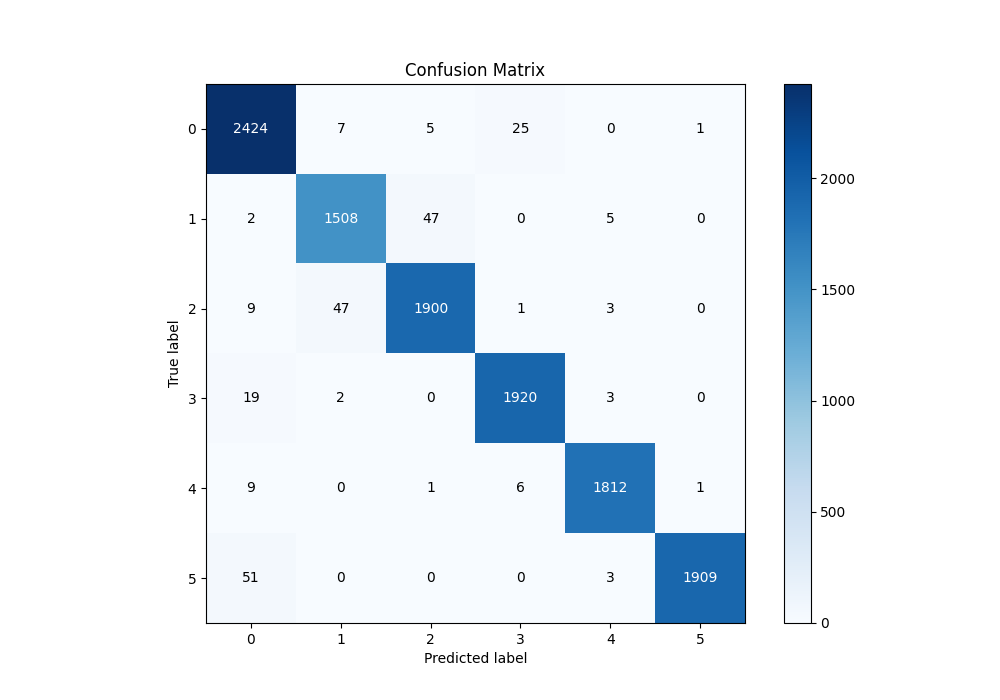

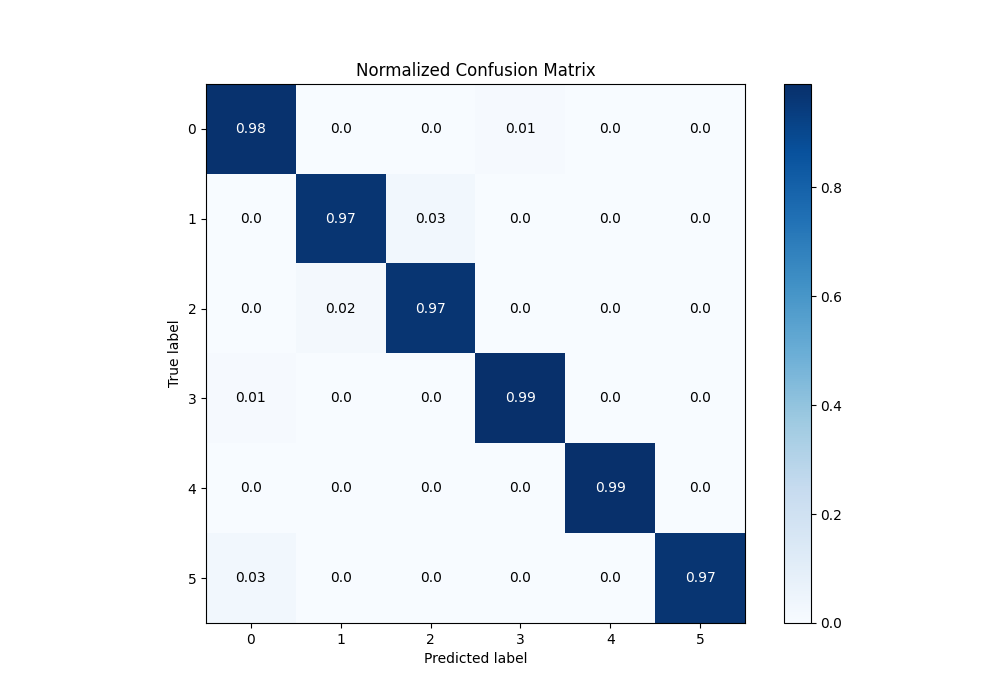

Confusion matrix

| | Predicted as 0 | Predicted as 1 | Predicted as 2 | Predicted as 3 | Predicted as 4 | Predicted as 5 |
|---|---|---|---|---|---|---|
| Labeled as 0 | 2424 | 7 | 5 | 25 | 0 | 1 |
| Labeled as 1 | 2 | 1508 | 47 | 0 | 5 | 0 |
| Labeled as 2 | 9 | 47 | 1900 | 1 | 3 | 0 |
| Labeled as 3 | 19 | 2 | 0 | 1920 | 3 | 0 |
| Labeled as 4 | 9 | 0 | 1 | 6 | 1812 | 1 |
| Labeled as 5 | 51 | 0 | 0 | 0 | 3 | 1909 |
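The per-class figures under Metric details can be cross-checked against the confusion matrix above. The following is a minimal sketch using plain Python; the function name `per_class_scores` is hypothetical, and the matrix is typed in by hand from the table (rows are true labels, columns are predictions).

```python
# Confusion matrix copied from the table above: cm[true][predicted].
cm = [
    [2424, 7, 5, 25, 0, 1],
    [2, 1508, 47, 0, 5, 0],
    [9, 47, 1900, 1, 3, 0],
    [19, 2, 0, 1920, 3, 0],
    [9, 0, 1, 6, 1812, 1],
    [51, 0, 0, 0, 3, 1909],
]


def per_class_scores(matrix):
    """Return per-class precision, recall and F1 lists."""
    n = len(matrix)
    precision, recall, f1 = [], [], []
    for c in range(n):
        true_pos = matrix[c][c]
        predicted_as_c = sum(matrix[row][c] for row in range(n))  # column sum
        labeled_as_c = sum(matrix[c])                             # row sum
        p = true_pos / predicted_as_c if predicted_as_c else 0.0
        r = true_pos / labeled_as_c if labeled_as_c else 0.0
        precision.append(p)
        recall.append(r)
        f1.append(2 * p * r / (p + r) if p + r else 0.0)
    return precision, recall, f1


precision, recall, f1 = per_class_scores(cm)
support = [sum(row) for row in cm]
total = sum(support)

accuracy = sum(cm[c][c] for c in range(len(cm))) / total
macro_f1 = sum(f1) / len(f1)
weighted_f1 = sum(f * s for f, s in zip(f1, support)) / total

print(round(accuracy, 6), round(macro_f1, 6), round(weighted_f1, 6))
```

The matrix also shows where the remaining errors concentrate: classes 1 and 2 are confused with each other 47 times in each direction, and 51 samples labeled 5 are predicted as 0, which accounts for the lower recall of class 5 despite its high precision.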

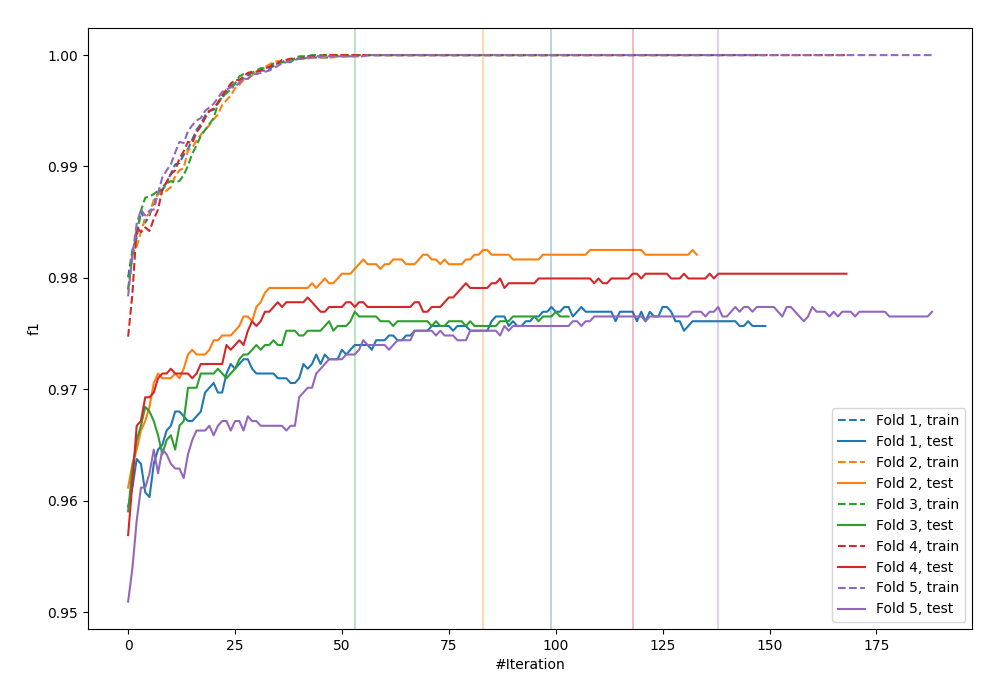

Learning curves

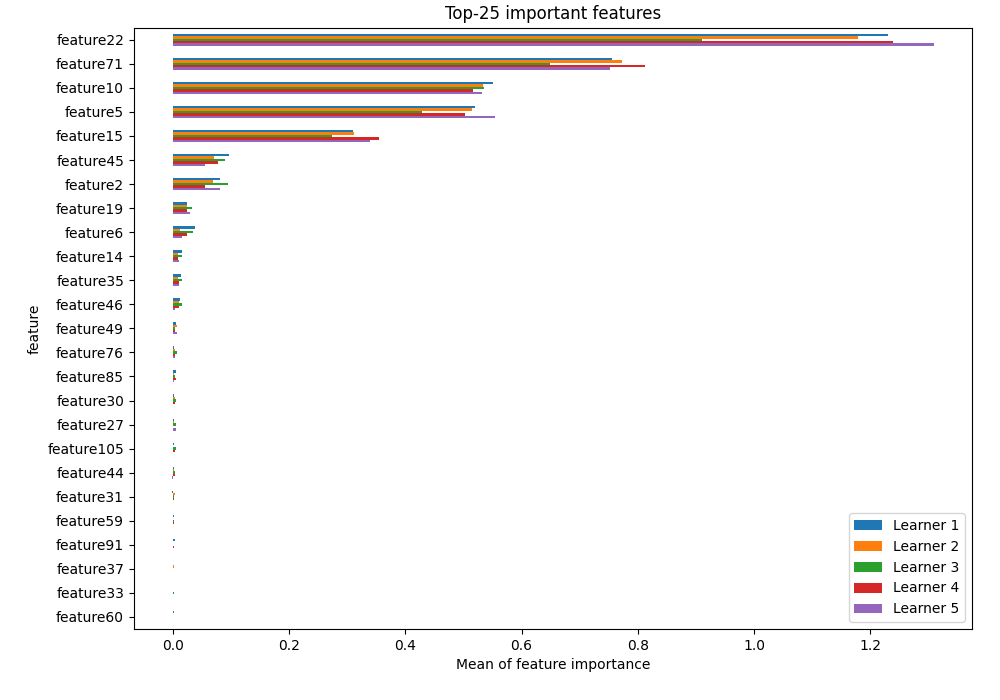

Permutation-based Importance

Confusion Matrix

Normalized Confusion Matrix

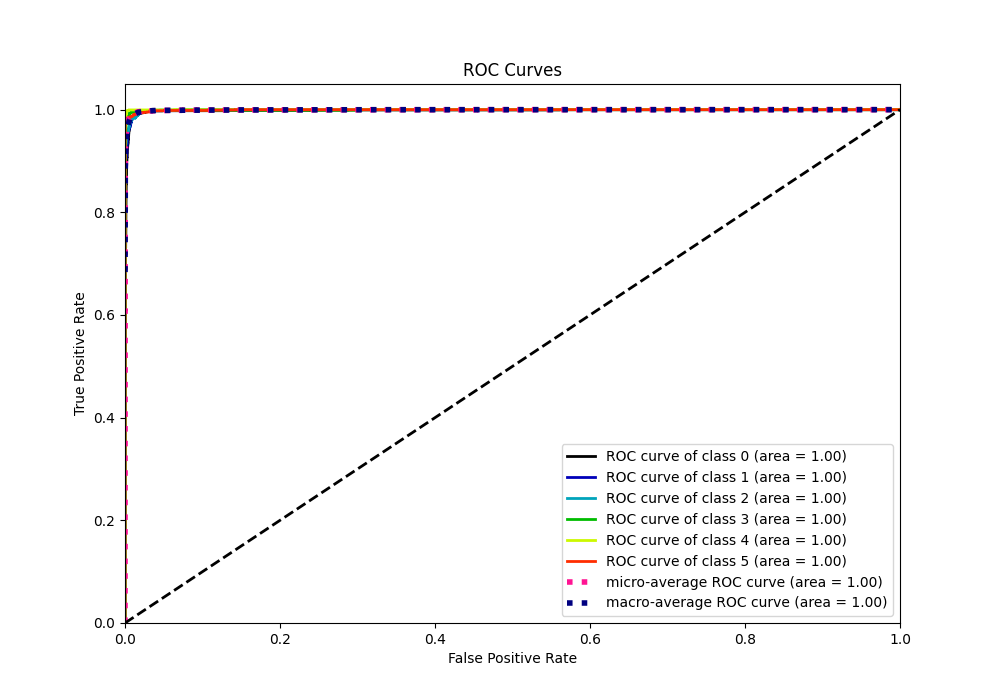

ROC Curve

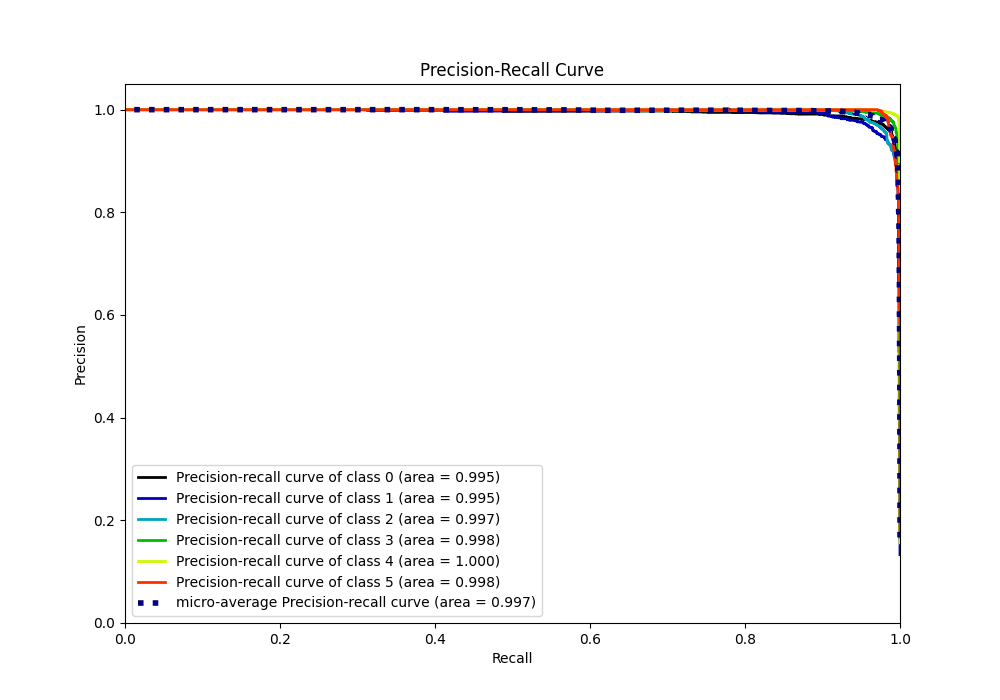

Precision Recall Curve