Summary of 3_Xgboost

Extreme Gradient Boosting (XGBoost)

- n_jobs: 6

- objective: multi:softprob

- eta: 0.075

- max_depth: 8

- min_child_weight: 5

- subsample: 1.0

- colsample_bytree: 1.0

- eval_metric: f1

- num_class: 6

- explain_level: 1
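With `objective: multi:softprob` and `num_class: 6`, XGBoost returns one probability per class for every row. These probabilities are a softmax over six per-class raw scores (margins), and `eta` scales how much each new tree adds to those margins. The hard label is the most probable class. The sketch below reproduces only that final step in plain Python, using made-up margins. It does not train a model, and the names `softprob` and `predict_label` are hypothetical.

```python
import math

NUM_CLASS = 6  # "num_class" from the report


def softprob(margins):
    """Convert one row's per-class raw scores into probabilities via softmax."""
    if len(margins) != NUM_CLASS:
        raise ValueError(f"expected {NUM_CLASS} margins, got {len(margins)}")
    top = max(margins)  # subtract the max before exp() for numerical stability
    exps = [math.exp(m - top) for m in margins]
    total = sum(exps)
    return [e / total for e in exps]


def predict_label(margins):
    """Return the index of the most probable class (the hard prediction)."""
    probs = softprob(margins)
    return max(range(NUM_CLASS), key=lambda k: probs[k])


# Hypothetical raw scores for one row; not taken from the trained model.
row = [0.2, -1.0, 0.1, 2.3, -0.5, 0.0]
probs = softprob(row)
print(predict_label(row))  # 3
```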

Validation

- validation_type: kfold

- k_folds: 5

- shuffle: True

- stratify: True

- random_seed: 42
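These settings describe 5-fold cross-validation. The rows are shuffled with seed 42, and each fold keeps roughly the class proportions of the whole dataset. Below is a minimal standard-library sketch of stratified k-fold splitting. It illustrates the idea only. The fold assignment it produces will not match the folds used for this report, and the function names are hypothetical.

```python
import random
from collections import defaultdict


def stratified_kfold(labels, k=5, shuffle=True, seed=42):
    """Return k lists of row indices whose class mix mirrors the full dataset."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    offset = 0
    for label in sorted(by_class):
        indices = by_class[label]
        if shuffle:
            rng.shuffle(indices)
        # Deal this class's rows round-robin across folds, continuing from where
        # the previous class stopped so fold sizes stay balanced.
        for j, idx in enumerate(indices):
            folds[(offset + j) % k].append(idx)
        offset += len(indices)
    return folds


def train_valid_splits(labels, k=5, shuffle=True, seed=42):
    """Yield (train_indices, valid_indices) pairs, one per fold."""
    folds = stratified_kfold(labels, k, shuffle, seed)
    for i, valid in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield sorted(train), sorted(valid)


# Hypothetical labels: 50 rows of class 0, 30 of class 1, 20 of class 2.
labels = [0] * 50 + [1] * 30 + [2] * 20
for train, valid in train_valid_splits(labels):
    print(len(train), len(valid), sum(1 for i in valid if labels[i] == 1))  # 80 20 6
```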

Optimized metric

f1

Training time

36.3 seconds

Metric details

| | 0 | 1 | 2 | 3 | 4 | 5 | accuracy | macro avg | weighted avg | logloss |
|---|---|---|---|---|---|---|---|---|---|---|
| precision | 0.965723 | 0.965894 | 0.970438 | 0.983103 | 0.99232 | 0.997916 | 0.97884 | 0.979233 | 0.97896 | 0.0662399 |
| recall | 0.984159 | 0.960948 | 0.971429 | 0.987654 | 0.989065 | 0.975548 | 0.97884 | 0.978134 | 0.97884 | 0.0662399 |
| f1-score | 0.974854 | 0.963415 | 0.970933 | 0.985373 | 0.99069 | 0.986605 | 0.97884 | 0.978645 | 0.978858 | 0.0662399 |
| support | 2462 | 1562 | 1960 | 1944 | 1829 | 1963 | 0.97884 | 11720 | 11720 | 0.0662399 |
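In the table, `macro avg` is the plain mean of the six per-class scores, and `weighted avg` weights each class by its `support` (row count). `accuracy` and `logloss` are single model-wide numbers, so the same value repeats in every row, including the `support` row. The sketch below recomputes the two F1 averages from the table values. It also defines multiclass log loss as the mean negative log-probability assigned to the true class. The clipping constant `eps` is an assumption, not a setting from the report.

```python
import math

# Per-class F1 scores and supports copied from the "Metric details" table.
F1 = [0.974854, 0.963415, 0.970933, 0.985373, 0.99069, 0.986605]
SUPPORT = [2462, 1562, 1960, 1944, 1829, 1963]


def macro_avg(values):
    """Unweighted mean over classes: every class counts equally."""
    return sum(values) / len(values)


def weighted_avg(values, support):
    """Mean over classes weighted by how many rows each class has."""
    return sum(v * s for v, s in zip(values, support)) / sum(support)


def multiclass_log_loss(y_true, probs, eps=1e-15):
    """Average negative log-probability given to the true class of each row."""
    total = 0.0
    for label, row in zip(y_true, probs):
        p = min(max(row[label], eps), 1 - eps)  # clip to avoid log(0)
        total -= math.log(p)
    return total / len(y_true)


# Should be close to the table's macro avg (0.978645) and weighted avg (0.978858).
print(round(macro_avg(F1), 6), round(weighted_avg(F1, SUPPORT), 6))
```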

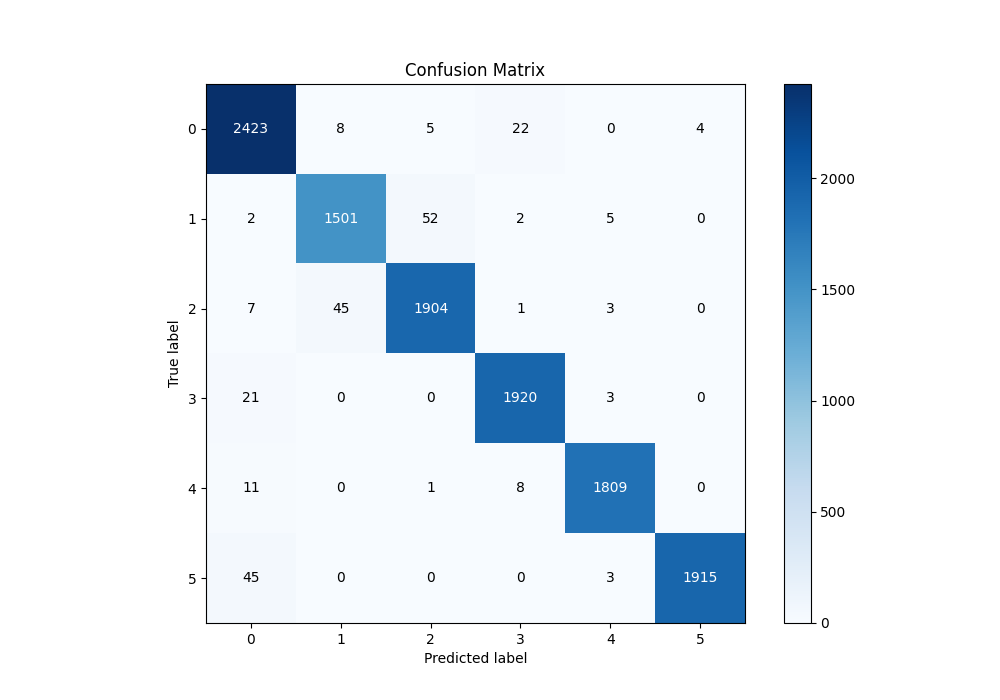

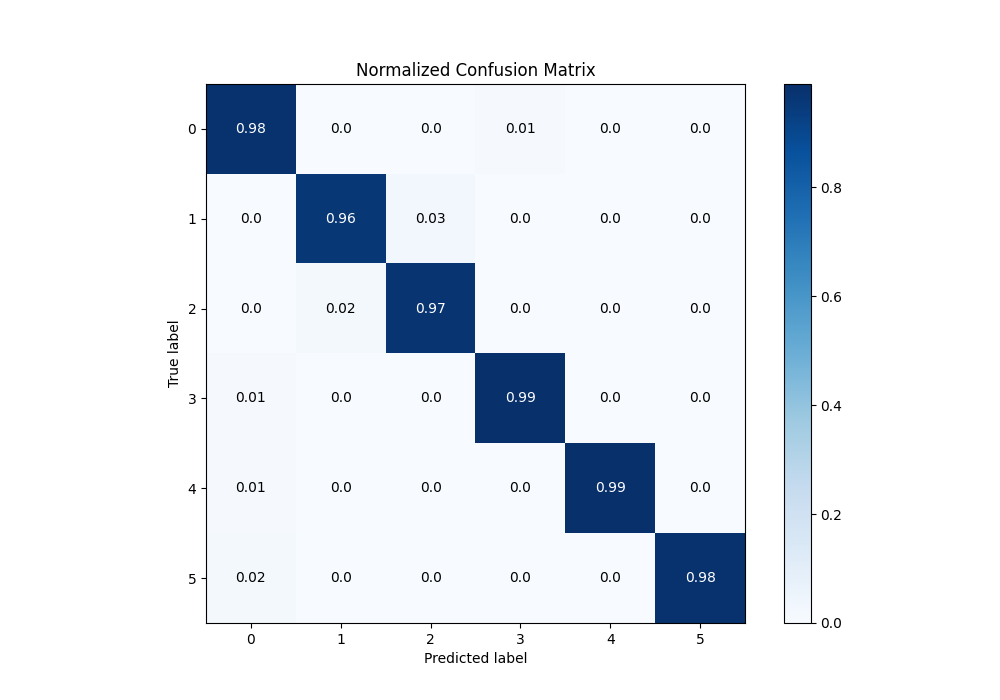

Confusion matrix

| | Predicted as 0 | Predicted as 1 | Predicted as 2 | Predicted as 3 | Predicted as 4 | Predicted as 5 |
|---|---|---|---|---|---|---|
| Labeled as 0 | 2423 | 8 | 5 | 22 | 0 | 4 |
| Labeled as 1 | 2 | 1501 | 52 | 2 | 5 | 0 |
| Labeled as 2 | 7 | 45 | 1904 | 1 | 3 | 0 |
| Labeled as 3 | 21 | 0 | 0 | 1920 | 3 | 0 |
| Labeled as 4 | 11 | 0 | 1 | 8 | 1809 | 0 |
| Labeled as 5 | 45 | 0 | 0 | 0 | 3 | 1915 |
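Every per-class score in the metric table can be recovered from this confusion matrix:

- Precision divides the diagonal cell by its column total.
- Recall divides the diagonal cell by its row total.
- F1 is the harmonic mean of precision and recall.

The sketch below copies the matrix and recomputes those values. Up to rounding, they agree with the metric details table. The diagonal total (11472 of 11720 rows) gives the reported accuracy of 0.97884.

```python
# Rows = true label, columns = predicted label, copied from the table above.
CONFUSION = [
    [2423, 8, 5, 22, 0, 4],
    [2, 1501, 52, 2, 5, 0],
    [7, 45, 1904, 1, 3, 0],
    [21, 0, 0, 1920, 3, 0],
    [11, 0, 1, 8, 1809, 0],
    [45, 0, 0, 0, 3, 1915],
]


def per_class_scores(cm):
    """Return (precision, recall, f1) lists, one entry per class."""
    n = len(cm)
    precision, recall, f1 = [], [], []
    for k in range(n):
        tp = cm[k][k]
        predicted_k = sum(cm[i][k] for i in range(n))  # column total
        actual_k = sum(cm[k])  # row total
        p = tp / predicted_k if predicted_k else 0.0
        r = tp / actual_k if actual_k else 0.0
        precision.append(p)
        recall.append(r)
        f1.append(2 * p * r / (p + r) if p + r else 0.0)
    return precision, recall, f1


def accuracy(cm):
    """Fraction of all rows that land on the diagonal (correctly classified)."""
    correct = sum(cm[k][k] for k in range(len(cm)))
    return correct / sum(sum(row) for row in cm)


precision, recall, f1 = per_class_scores(CONFUSION)
print([round(x, 6) for x in f1], round(accuracy(CONFUSION), 5))
```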

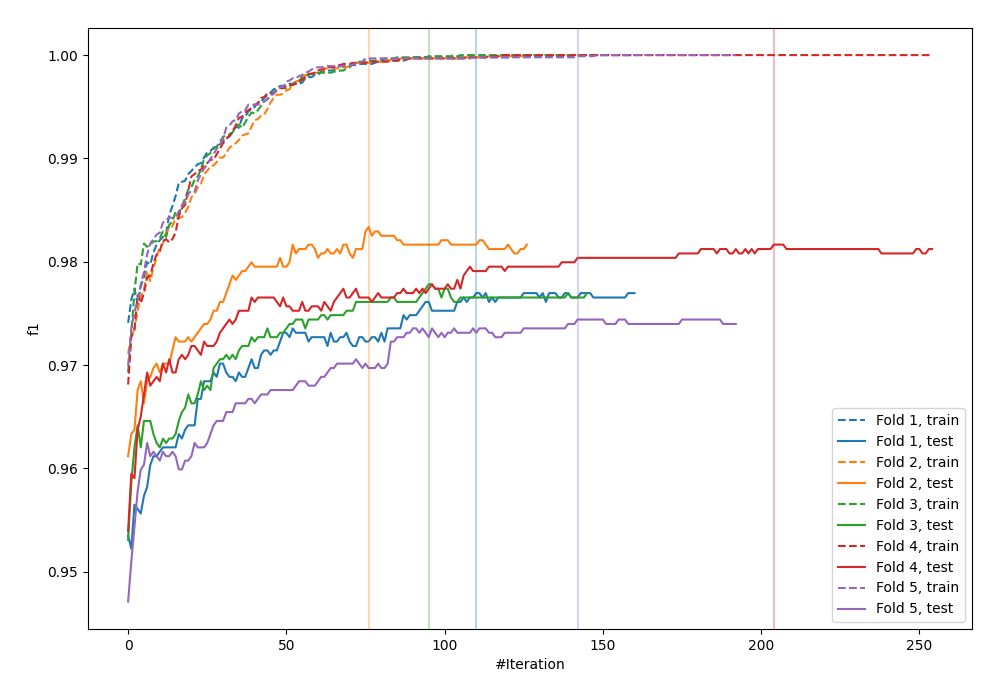

Learning curves

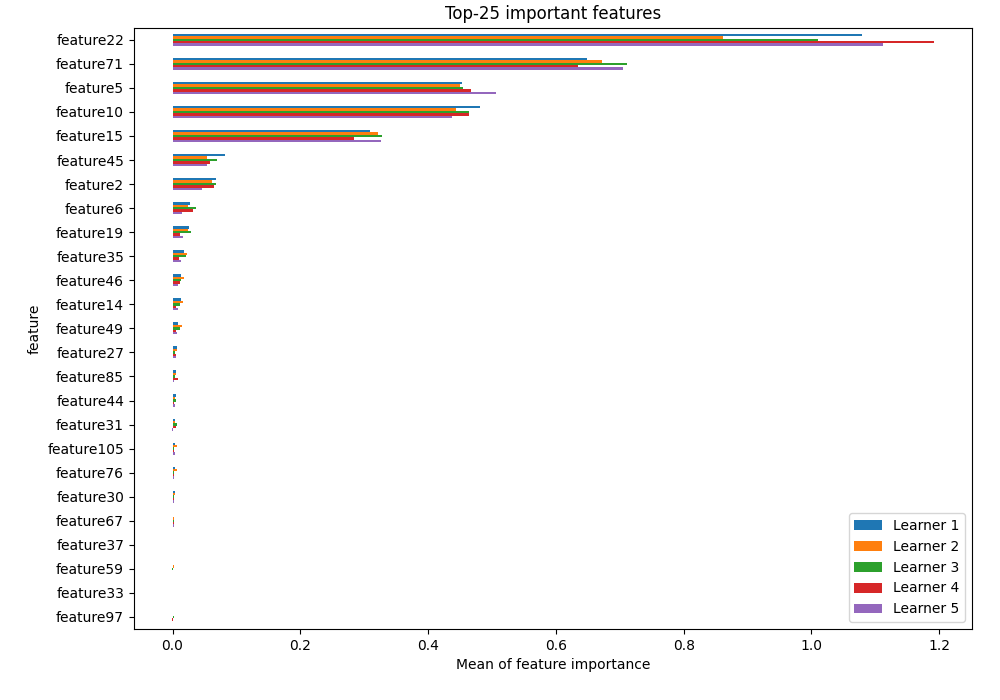

Permutation-based Importance
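Permutation importance measures how much a score drops when one feature's values are shuffled. Shuffling breaks that feature's relationship with the target while leaving the model unchanged. The report does not say which rows, metric, or number of repeats were used. The sketch below is therefore a generic, model-agnostic version, and its parameters (`predict`, `score`, `n_repeats`) and the toy model are hypothetical.

```python
import random


def permutation_importance(predict, rows, labels, score, n_repeats=5, seed=42):
    """Mean drop in `score` when each feature column is shuffled on its own.

    predict: callable mapping a list of feature rows (lists) to predicted labels.
    score:   callable(y_true, y_pred) -> float, where higher is better.
    """
    rng = random.Random(seed)
    baseline = score(labels, predict(rows))
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in rows]
            rng.shuffle(column)
            # Copy each row, replacing only feature j with its shuffled value.
            shuffled = [row[:j] + [v] + row[j + 1:] for row, v in zip(rows, column)]
            drops.append(baseline - score(labels, predict(shuffled)))
        importances.append(sum(drops) / n_repeats)
    return importances


def accuracy(y_true, y_pred):
    return sum(a == b for a, b in zip(y_true, y_pred)) / len(y_true)


def toy_model(batch):
    """Hypothetical model that only looks at feature 0."""
    return [r[0] for r in batch]


# Toy data: the label equals feature 0; feature 1 is unrelated filler.
rows = [[i % 2, (i * 7) % 3] for i in range(100)]
labels = [i % 2 for i in range(100)]
importances = permutation_importance(toy_model, rows, labels, accuracy)
print(importances)
```

The toy model reads only the first feature. Shuffling the second feature therefore leaves accuracy unchanged, and that feature's importance is exactly 0.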

Confusion Matrix

Normalized Confusion Matrix

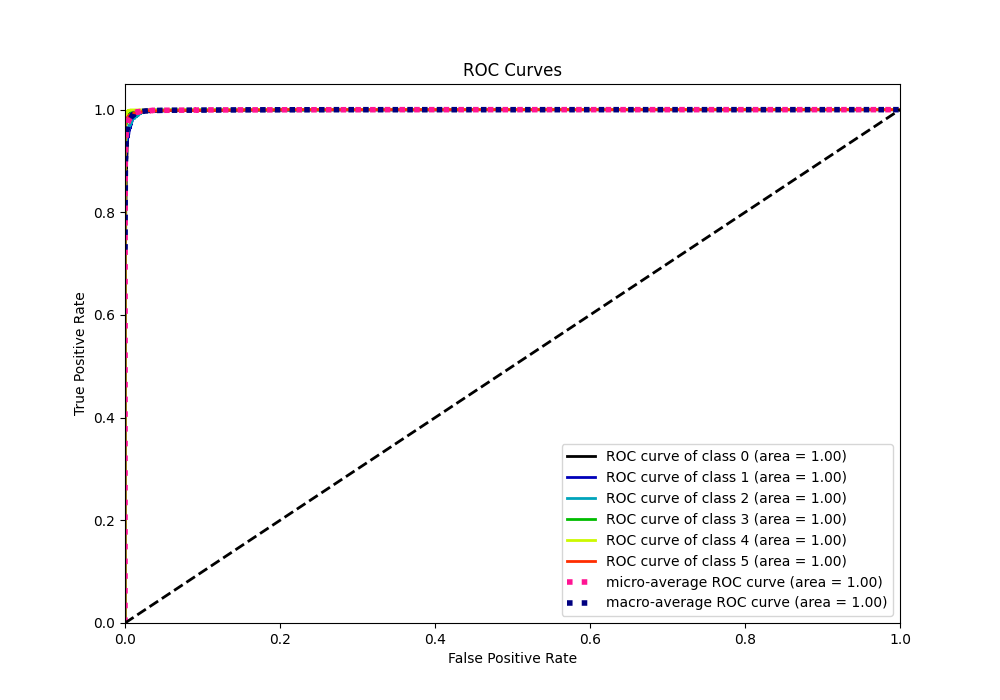
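A normalized confusion matrix is commonly obtained by dividing each row by its total. Each cell then becomes the share of a true class assigned to each predicted class, and the diagonal equals per-class recall. The report does not state which normalization the plot uses. The sketch below assumes row normalization and reuses the counts from the confusion matrix table. `normalize_rows` is a hypothetical helper.

```python
# Counts copied from the confusion matrix table (rows = true label).
CONFUSION = [
    [2423, 8, 5, 22, 0, 4],
    [2, 1501, 52, 2, 5, 0],
    [7, 45, 1904, 1, 3, 0],
    [21, 0, 0, 1920, 3, 0],
    [11, 0, 1, 8, 1809, 0],
    [45, 0, 0, 0, 3, 1915],
]


def normalize_rows(cm):
    """Divide each row by its total so every row sums to 1."""
    out = []
    for row in cm:
        total = sum(row)
        out.append([v / total if total else 0.0 for v in row])
    return out


norm = normalize_rows(CONFUSION)
# Share of class-1 rows predicted as class 2, the largest off-diagonal share in that row.
print(round(norm[1][2], 4))
```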

ROC Curve

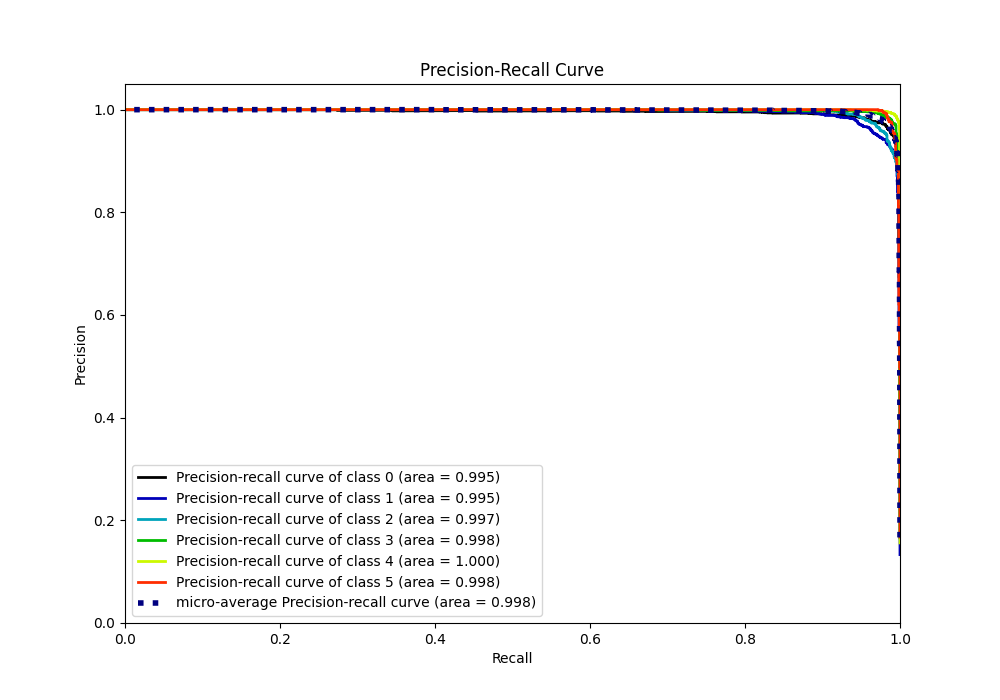
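For a multiclass model, ROC curves are usually drawn one-vs-rest: one curve per class, with each row scored by its predicted probability for that class. The sketch below assumes that convention. It sweeps the threshold from high to low scores, treats tied scores as a single step, and records (false positive rate, true positive rate) points. It then integrates those points with the trapezoidal rule to get the AUC. Function names are hypothetical.

```python
def roc_points(y_true, scores, positive):
    """(FPR, TPR) pairs for `positive` vs the rest as the threshold decreases."""
    n_pos = sum(1 for y in y_true if y == positive)
    n_neg = len(y_true) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need at least one positive and one negative row")
    pairs = sorted(zip(scores, y_true), key=lambda t: -t[0])
    tp = fp = 0
    points = [(0.0, 0.0)]
    i = 0
    while i < len(pairs):
        threshold = pairs[i][0]
        # Consume every row tied at this score before emitting a point.
        while i < len(pairs) and pairs[i][0] == threshold:
            if pairs[i][1] == positive:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / n_neg, tp / n_pos))
    return points


def trapezoid_auc(points):
    """Area under a piecewise-linear curve given as sorted (x, y) points."""
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:]))


# Hypothetical labels and predicted probabilities of class 1.
pts = roc_points([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8], positive=1)
print(trapezoid_auc(pts))  # 0.75
```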

Precision Recall Curve
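A precision-recall curve can be built one class at a time by recording precision and recall at each distinct score threshold. A common one-number summary is average precision: the sum of precision at each threshold, weighted by the increase in recall at that step. This is the definition scikit-learn documents for `average_precision_score`. The sketch below assumes one-vs-rest scoring, and the helper names are hypothetical.

```python
def pr_points(y_true, scores, positive):
    """(recall, precision) pairs as the threshold sweeps from high to low scores."""
    n_pos = sum(1 for y in y_true if y == positive)
    if n_pos == 0:
        raise ValueError("no rows of the positive class")
    pairs = sorted(zip(scores, y_true), key=lambda t: -t[0])
    tp = fp = 0
    points = []
    i = 0
    while i < len(pairs):
        threshold = pairs[i][0]
        # Rows tied at the same score are all classified positive together.
        while i < len(pairs) and pairs[i][0] == threshold:
            if pairs[i][1] == positive:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((tp / n_pos, tp / (tp + fp)))
    return points


def average_precision(y_true, scores, positive):
    """Sum of precision weighted by each step's increase in recall."""
    ap, prev_recall = 0.0, 0.0
    for recall, precision in pr_points(y_true, scores, positive):
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


# Hypothetical labels and predicted probabilities of class 1.
print(round(average_precision([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8], positive=1), 4))
```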