Summary of 18_Xgboost

Extreme Gradient Boosting (Xgboost)

- n_jobs: 6

- objective: multi:softprob

- eta: 0.1

- max_depth: 5

- min_child_weight: 1

- subsample: 1.0

- colsample_bytree: 1.0

- eval_metric: f1

- num_class: 6

- explain_level: 1
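
The settings above can be sketched as a parameter dictionary in the shape XGBoost's native training API accepts. This is a minimal sketch under two assumptions that the report does not confirm: `explain_level` is treated as a reporting option of the tool that produced this summary rather than a booster parameter, and `f1` is treated as a custom evaluation function, since it does not appear to be one of XGBoost's built-in metric names. `REPORT_SETTINGS`, `NON_BOOSTER_KEYS`, and `to_booster_params` are hypothetical names used only for illustration.

```python
# Settings copied from the report's hyperparameter list.
REPORT_SETTINGS = {
    "n_jobs": 6,
    "objective": "multi:softprob",
    "eta": 0.1,
    "max_depth": 5,
    "min_child_weight": 1,
    "subsample": 1.0,
    "colsample_bytree": 1.0,
    "eval_metric": "f1",
    "num_class": 6,
    "explain_level": 1,
}

# Assumption: these keys are handled outside the booster's parameter dict.
# "explain_level" is assumed to be a reporting option, and "f1" is assumed
# to be supplied as a custom evaluation function, not a built-in metric name.
NON_BOOSTER_KEYS = {"explain_level", "eval_metric"}


def to_booster_params(settings):
    """Build a parameter dict in the shape XGBoost's native API accepts."""
    params = {k: v for k, v in settings.items() if k not in NON_BOOSTER_KEYS}
    # The native API names the thread count "nthread"; "n_jobs" is the
    # scikit-learn wrapper's spelling of the same setting.
    params["nthread"] = params.pop("n_jobs")
    return params


booster_params = to_booster_params(REPORT_SETTINGS)
print(booster_params)
```

With `objective` set to `multi:softprob` and `num_class` set to 6, the model emits six class probabilities per sample, and the predicted label is the index of the largest one.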

Validation

- validation_type: kfold

- k_folds: 5

- shuffle: True

- stratify: True

- random_seed: 42
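
To illustrate what these validation settings mean, here is a minimal pure-Python sketch of a shuffled, stratified 5-fold split. It is not the splitter the report's tool actually used, so the exact fold membership will differ. It only shows the idea: shuffle each class with a fixed seed, then deal its samples evenly across the folds so every fold keeps roughly the same class proportions. The class sizes come from the `support` row of the metric table; `stratified_kfold` is a hypothetical helper name.

```python
import random
from collections import Counter

# Class supports taken from the "support" row of the metric table.
SUPPORT = {0: 2462, 1: 1562, 2: 1960, 3: 1944, 4: 1829, 5: 1963}


def stratified_kfold(labels, k=5, seed=42):
    """Return k lists of sample indices with near-equal class proportions."""
    rng = random.Random(seed)  # random_seed: 42
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)

    folds = [[] for _ in range(k)]
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)  # shuffle: True
        # stratify: True -> deal each class round-robin into the folds
        for position, idx in enumerate(indices):
            folds[position % k].append(idx)
    return folds


labels = [cls for cls, count in SUPPORT.items() for _ in range(count)]
folds = stratified_kfold(labels)
fold_class_counts = [Counter(labels[i] for i in fold) for fold in folds]

for number, counts in enumerate(fold_class_counts):
    print(number, sum(counts.values()), dict(sorted(counts.items())))
```

Each fold serves once as the validation set while the other four are used for training, so every one of the 11720 samples is scored exactly once.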

Optimized metric

f1

Training time

36.4 seconds

Metric details

| | 0 | 1 | 2 | 3 | 4 | 5 | accuracy | macro avg | weighted avg | logloss |
|---|---|---|---|---|---|---|---|---|---|---|
| precision | 0.970424 | 0.964171 | 0.973347 | 0.98467 | 0.991238 | 0.996877 | 0.980034 | 0.980121 | 0.980121 | 0.067485 |
| recall | 0.98619 | 0.964789 | 0.968878 | 0.991255 | 0.989612 | 0.975548 | 0.980034 | 0.979378 | 0.980034 | 0.067485 |
| f1-score | 0.978243 | 0.96448 | 0.971107 | 0.987952 | 0.990424 | 0.986097 | 0.980034 | 0.979717 | 0.980042 | 0.067485 |
| support | 2462 | 1562 | 1960 | 1944 | 1829 | 1963 | 0.980034 | 11720 | 11720 | 0.067485 |
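
The `macro avg` and `weighted avg` columns can be recomputed from the per-class columns. The sketch below does this for the f1-score row, using only values copied from the table: the macro average is the plain mean over the six classes, and the weighted average weights each class by its support. The helper names are illustrative.

```python
# Per-class F1 scores and supports copied from the metric table.
F1 = [0.978243, 0.96448, 0.971107, 0.987952, 0.990424, 0.986097]
SUPPORT = [2462, 1562, 1960, 1944, 1829, 1963]


def macro_average(scores):
    """Unweighted mean: every class counts equally."""
    return sum(scores) / len(scores)


def weighted_average(scores, weights):
    """Mean weighted by the number of samples in each class."""
    return sum(s * w for s, w in zip(scores, weights)) / sum(weights)


macro_f1 = macro_average(F1)
weighted_f1 = weighted_average(F1, SUPPORT)

print(f"macro avg f1:    {macro_f1:.6f}")
print(f"weighted avg f1: {weighted_f1:.6f}")
```

Up to rounding of the inputs, the two results should agree with the table's 0.979717 and 0.980042. The values are close because the classes are fairly balanced, so weighting by support changes little.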

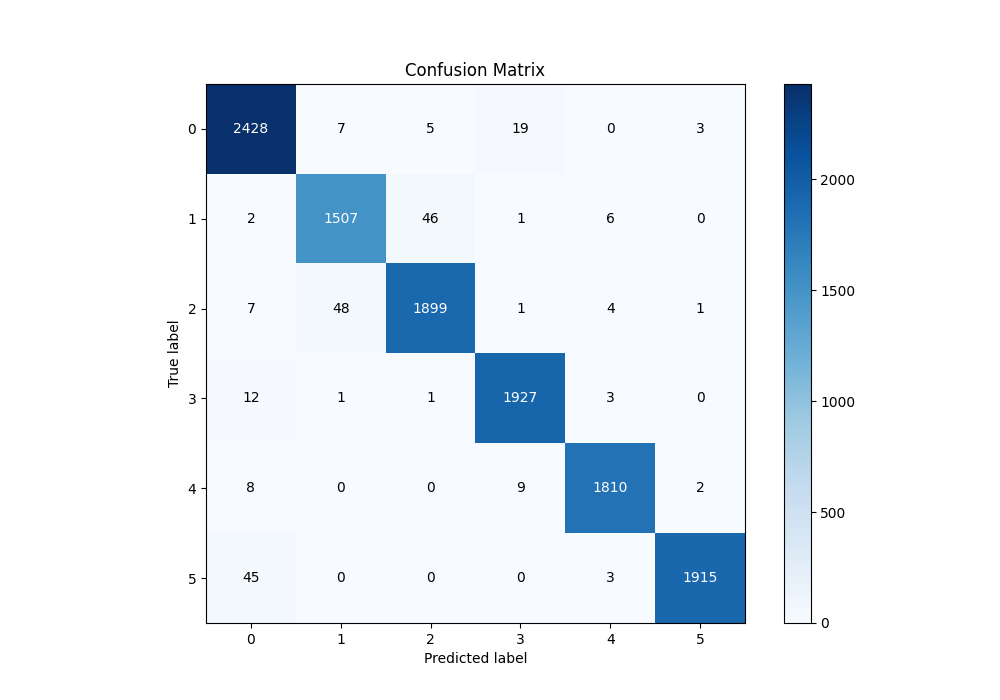

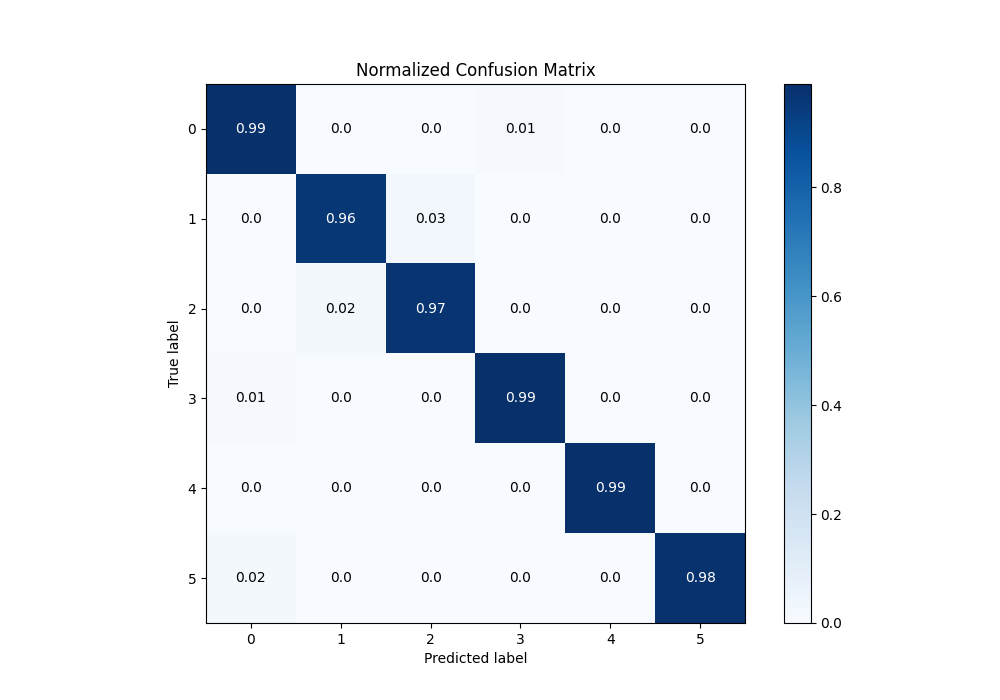

Confusion matrix

| | Predicted as 0 | Predicted as 1 | Predicted as 2 | Predicted as 3 | Predicted as 4 | Predicted as 5 |
|---|---|---|---|---|---|---|
| Labeled as 0 | 2428 | 7 | 5 | 19 | 0 | 3 |
| Labeled as 1 | 2 | 1507 | 46 | 1 | 6 | 0 |
| Labeled as 2 | 7 | 48 | 1899 | 1 | 4 | 1 |
| Labeled as 3 | 12 | 1 | 1 | 1927 | 3 | 0 |
| Labeled as 4 | 8 | 0 | 0 | 9 | 1810 | 2 |
| Labeled as 5 | 45 | 0 | 0 | 0 | 3 | 1915 |
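
The per-class precision and recall and the overall accuracy in the metric table follow directly from this confusion matrix. The sketch below recomputes them from the counts copied above and also ranks the off-diagonal cells to show which class pairs are confused most often. Variable names are illustrative.

```python
# Rows are true labels, columns are predicted labels (copied from the table).
CM = [
    [2428, 7, 5, 19, 0, 3],
    [2, 1507, 46, 1, 6, 0],
    [7, 48, 1899, 1, 4, 1],
    [12, 1, 1, 1927, 3, 0],
    [8, 0, 0, 9, 1810, 2],
    [45, 0, 0, 0, 3, 1915],
]

n = len(CM)
total = sum(sum(row) for row in CM)

# Accuracy: correctly classified samples (the diagonal) over all samples.
accuracy = sum(CM[i][i] for i in range(n)) / total
# Recall: diagonal over the row sum (all samples truly in that class).
recall = [CM[i][i] / sum(CM[i]) for i in range(n)]
# Precision: diagonal over the column sum (all samples predicted as that class).
precision = [CM[i][i] / sum(CM[r][i] for r in range(n)) for i in range(n)]

# Off-diagonal cells as (count, true label, predicted label), largest first.
confusions = sorted(
    ((CM[t][p], t, p) for t in range(n) for p in range(n) if t != p),
    reverse=True,
)
top_confusions = confusions[:3]

print(f"accuracy: {accuracy:.6f}")
for count, true_label, predicted in top_confusions:
    print(f"{count} samples labeled {true_label} were predicted as {predicted}")
```

The three largest off-diagonal cells are 48 (labeled 2, predicted 1), 46 (labeled 1, predicted 2), and 45 (labeled 5, predicted 0), so most of the remaining error comes from classes 1 and 2 being mistaken for each other and from class 5 being predicted as class 0.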

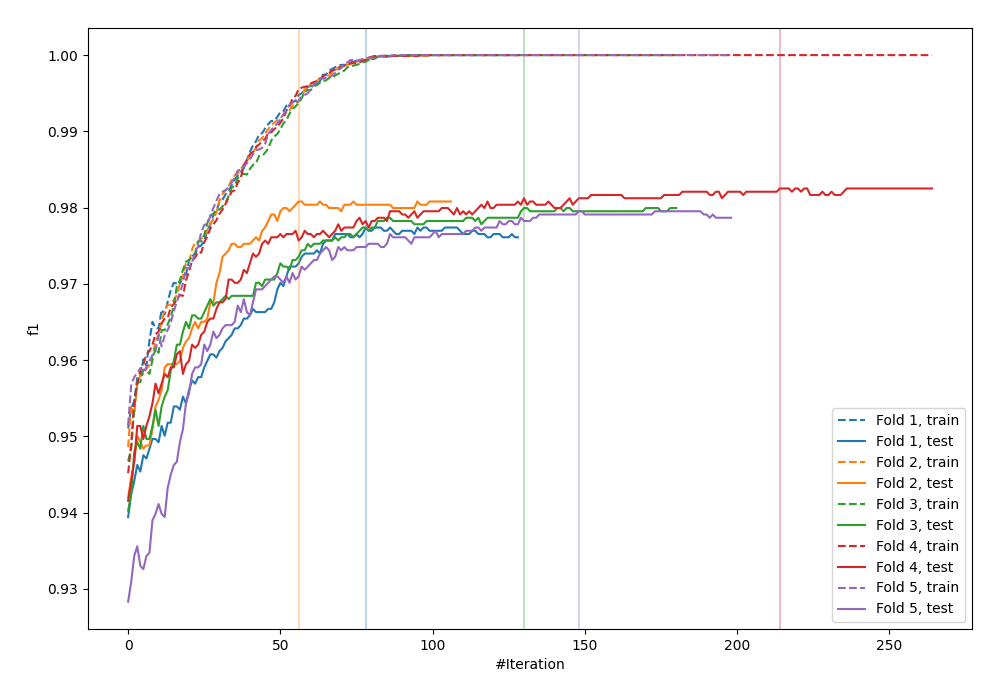

Learning curves

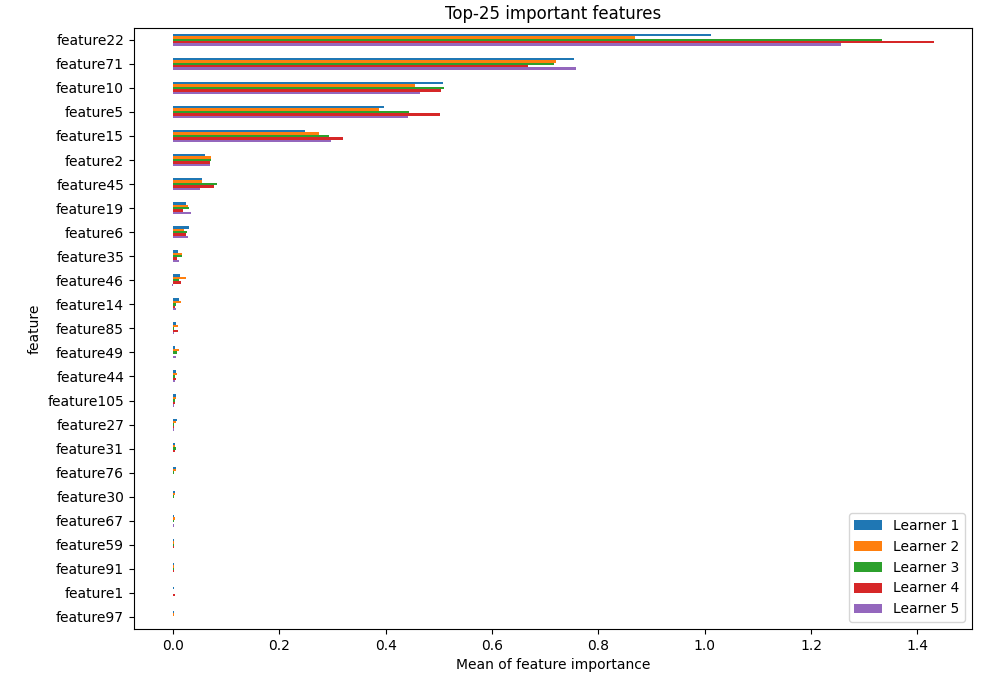

Permutation-based Importance

Confusion Matrix

Normalized Confusion Matrix

ROC Curve

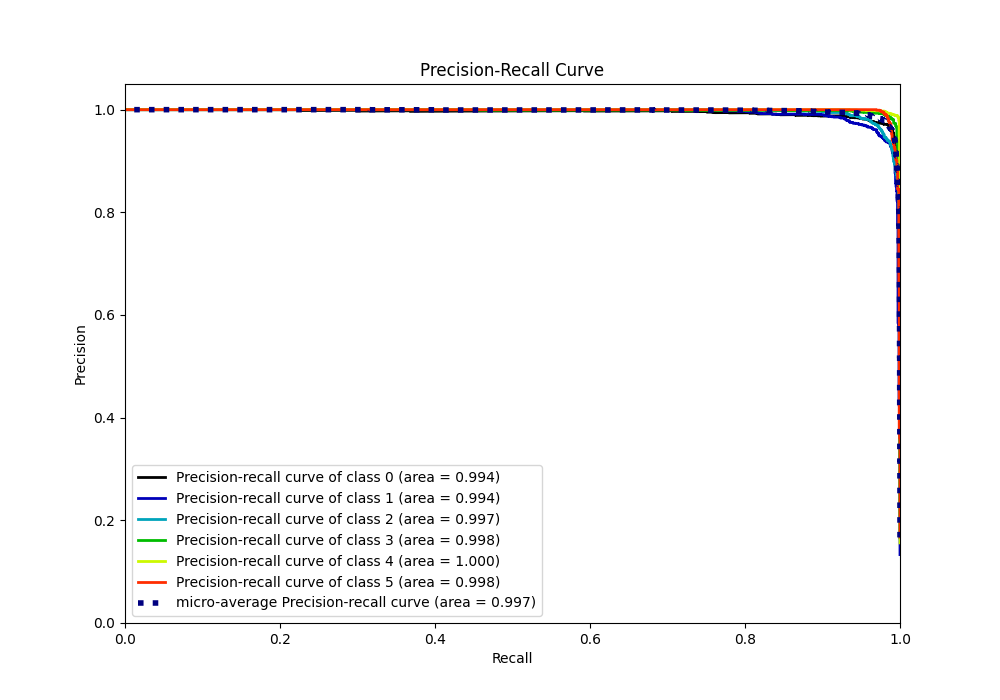

Precision Recall Curve