Summary of 17_Xgboost

Extreme Gradient Boosting (Xgboost)

- n_jobs: 6

- objective: multi:softprob

- eta: 0.05

- max_depth: 5

- min_child_weight: 1

- subsample: 1.0

- colsample_bytree: 1.0

- eval_metric: f1

- num_class: 6

- explain_level: 1
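The settings `objective: multi:softprob` and `num_class: 6` mean that XGBoost returns six class probabilities for each row. It produces them by applying a softmax to six per-class raw scores (margins). The predicted label, which is what the confusion matrix counts, is the class with the highest probability. The plain-Python sketch below reproduces only that final step. The margin values are made-up placeholders, not outputs of this model, and `softprob` / `predict_label` are hypothetical helper names.

```python
import math
from typing import List

NUM_CLASS = 6  # matches num_class in the parameters above


def softprob(margins: List[float]) -> List[float]:
    """Softmax over per-class raw scores, as multi:softprob applies per row."""
    if len(margins) != NUM_CLASS:
        raise ValueError(f"expected {NUM_CLASS} margins, got {len(margins)}")
    # Subtracting the max margin avoids overflow; the softmax result is unchanged.
    m = max(margins)
    exps = [math.exp(x - m) for x in margins]
    total = sum(exps)
    return [e / total for e in exps]


def predict_label(margins: List[float]) -> int:
    """Predicted class = index of the largest probability."""
    probs = softprob(margins)
    return max(range(NUM_CLASS), key=lambda k: probs[k])


# Placeholder margins for a single row (illustrative only, not model output).
row = [2.0, 0.5, -1.0, 0.0, 0.3, -0.2]
print([round(p, 3) for p in softprob(row)])
print(predict_label(row))
```

With `multi:softmax` XGBoost would return the label directly. `multi:softprob` keeps the full probability vector, which the log loss and the ROC / precision-recall curves require.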

Validation

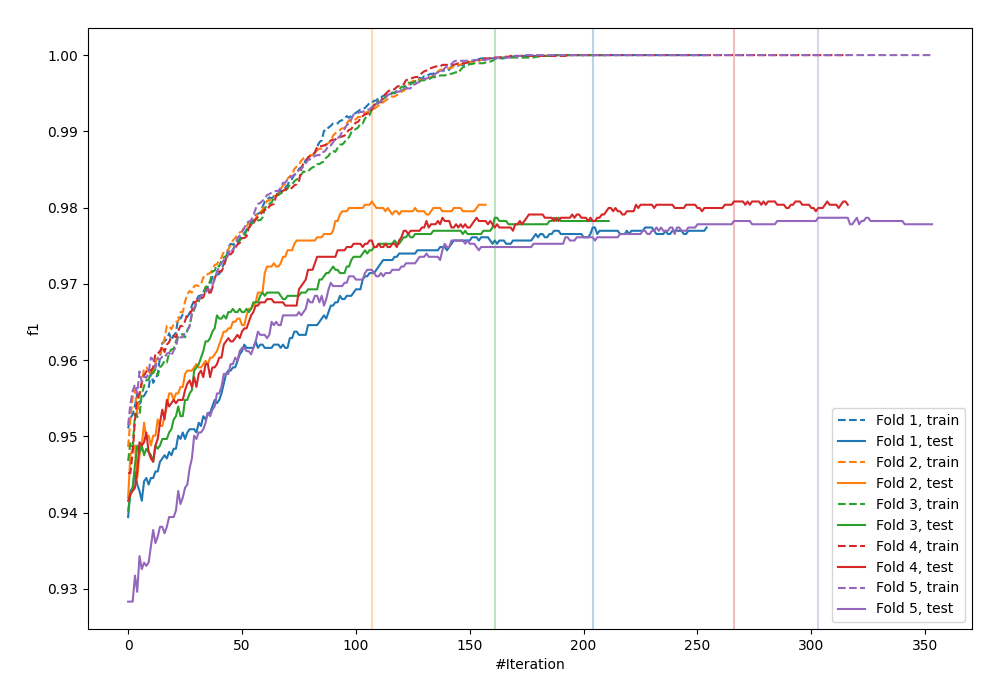

- validation_type: kfold

- k_folds: 5

- shuffle: True

- stratify: True

- random_seed: 42
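These validation settings describe 5-fold cross-validation. The rows are shuffled with seed 42, and each fold keeps roughly the same class proportions as the full dataset. The minimal plain-Python sketch below illustrates that idea. It is a simplified stand-in: in scikit-learn the corresponding splitter is `StratifiedKFold(n_splits=5, shuffle=True, random_state=42)`. The sketch will not reproduce the exact folds used for this model, and the helper name `stratified_kfold` is hypothetical.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def stratified_kfold(
    labels: Sequence[int], k: int = 5, seed: int = 42
) -> List[Tuple[List[int], List[int]]]:
    """Return one (train_indices, test_indices) pair per fold.

    Simplified sketch: the row indices of each class are shuffled with a
    seeded RNG and dealt round-robin into k folds, so every fold receives
    a near-equal share of every class.
    """
    rng = random.Random(seed)
    by_class: Dict[int, List[int]] = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)

    folds: List[List[int]] = [[] for _ in range(k)]
    offset = 0
    for y in sorted(by_class):
        idxs = by_class[y]
        rng.shuffle(idxs)  # shuffle=True, seeded for reproducibility
        for j, idx in enumerate(idxs):
            folds[(offset + j) % k].append(idx)
        # Continue dealing where the previous class stopped to balance fold sizes.
        offset = (offset + len(idxs)) % k

    splits = []
    for i in range(k):
        test = sorted(folds[i])
        test_set = set(test)
        train = [idx for idx in range(len(labels)) if idx not in test_set]
        splits.append((train, test))
    return splits
```

Each row lands in exactly one test fold. The out-of-fold predictions from the five models therefore cover every row once, which is why the confusion matrix below sums to the full 11720 rows.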

Optimized metric

f1

Training time

53.0 seconds

Metric details

| 0 | 1 | 2 | 3 | 4 | 5 | accuracy | macro avg | weighted avg | logloss | |

|---|---|---|---|---|---|---|---|---|---|---|

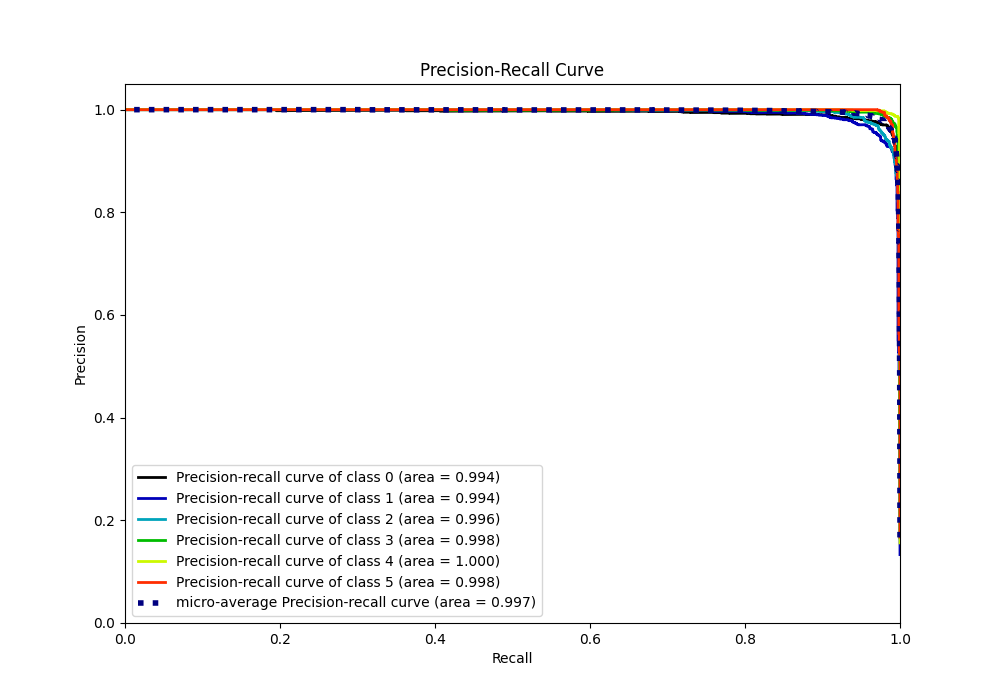

| precision | 0.969261 | 0.96408 | 0.971341 | 0.984151 | 0.990153 | 0.997393 | 0.979266 | 0.979397 | 0.979361 | 0.0701505 |

| recall | 0.98619 | 0.962228 | 0.968367 | 0.990226 | 0.989612 | 0.974529 | 0.979266 | 0.978525 | 0.979266 | 0.0701505 |

| f1-score | 0.977653 | 0.963153 | 0.969852 | 0.987179 | 0.989882 | 0.985828 | 0.979266 | 0.978925 | 0.979274 | 0.0701505 |

| support | 2462 | 1562 | 1960 | 1944 | 1829 | 1963 | 0.979266 | 11720 | 11720 | 0.0701505 |
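The `macro avg` column is the unweighted mean of the six per-class scores. The `weighted avg` column weights each class by its `support`, the number of validation rows with that label (11720 in total). The short Python check below recomputes both F1 averages. The `f1` and `support` lists are copied from the table rows above.

```python
# Per-class values copied from the "f1-score" and "support" rows of the table.
f1 = [0.977653, 0.963153, 0.969852, 0.987179, 0.989882, 0.985828]
support = [2462, 1562, 1960, 1944, 1829, 1963]

# Macro average: every class counts equally, regardless of size.
macro_f1 = sum(f1) / len(f1)

# Weighted average: each class counts in proportion to its support.
weighted_f1 = sum(s * f for s, f in zip(support, f1)) / sum(support)

print(f"macro avg f1    = {macro_f1:.6f}")
print(f"weighted avg f1 = {weighted_f1:.6f}")
```

The two averages are close here because the classes are fairly balanced (1562 to 2462 rows each). With a strongly imbalanced label distribution they can diverge noticeably.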

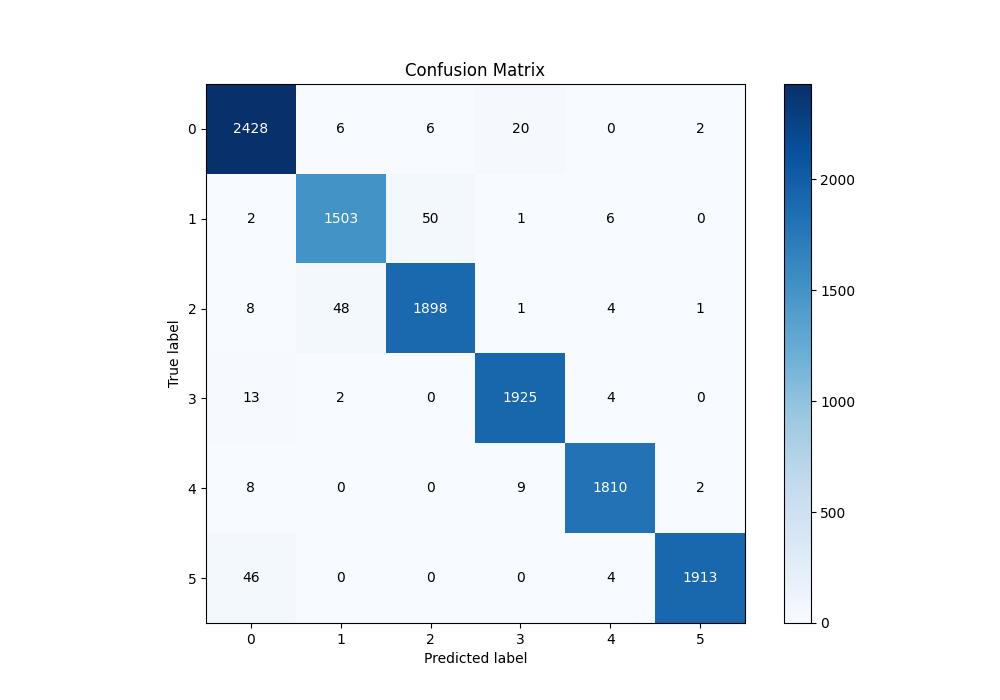

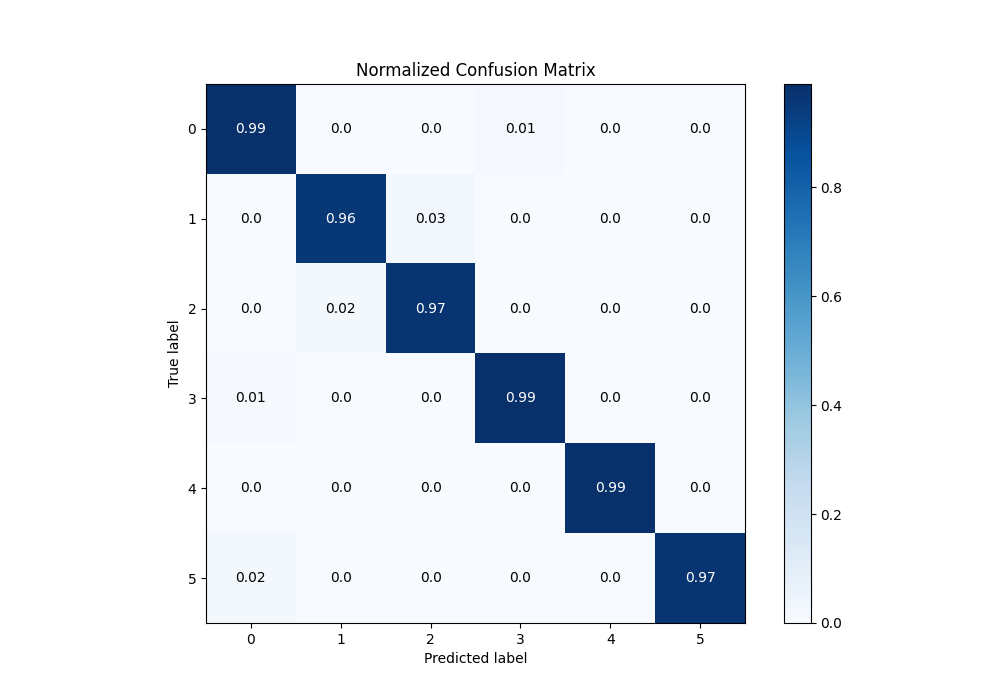

Confusion matrix

| | Predicted as 0 | Predicted as 1 | Predicted as 2 | Predicted as 3 | Predicted as 4 | Predicted as 5 |
|---|---|---|---|---|---|---|
| Labeled as 0 | 2428 | 6 | 6 | 20 | 0 | 2 |
| Labeled as 1 | 2 | 1503 | 50 | 1 | 6 | 0 |
| Labeled as 2 | 8 | 48 | 1898 | 1 | 4 | 1 |
| Labeled as 3 | 13 | 2 | 0 | 1925 | 4 | 0 |
| Labeled as 4 | 8 | 0 | 0 | 9 | 1810 | 2 |
| Labeled as 5 | 46 | 0 | 0 | 0 | 4 | 1913 |
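Every per-class number in the metric table can be derived from this confusion matrix, where rows are true labels and columns are predicted labels. Recall for class k is the diagonal entry divided by its row total (the support). Precision is the diagonal entry divided by its column total. F1 is the harmonic mean of the two, and accuracy is the diagonal sum divided by all 11720 rows. The plain-Python sketch below recomputes these from the counts above. The helper names `per_class_metrics` and `accuracy` are illustrative.

```python
from typing import List, Tuple

# Rows: true label 0..5; columns: predicted label 0..5 (copied from the table above).
CM: List[List[int]] = [
    [2428, 6, 6, 20, 0, 2],
    [2, 1503, 50, 1, 6, 0],
    [8, 48, 1898, 1, 4, 1],
    [13, 2, 0, 1925, 4, 0],
    [8, 0, 0, 9, 1810, 2],
    [46, 0, 0, 0, 4, 1913],
]


def per_class_metrics(cm: List[List[int]]) -> List[Tuple[float, float, float, int]]:
    """Return (precision, recall, f1, support) for each class."""
    n = len(cm)
    metrics = []
    for k in range(n):
        tp = cm[k][k]
        row_total = sum(cm[k])                        # rows truly labeled k (support)
        col_total = sum(cm[i][k] for i in range(n))   # rows predicted as k
        precision = tp / col_total
        recall = tp / row_total
        f1 = 2 * precision * recall / (precision + recall)
        metrics.append((precision, recall, f1, row_total))
    return metrics


def accuracy(cm: List[List[int]]) -> float:
    """Fraction of rows on the diagonal (correctly classified)."""
    correct = sum(cm[k][k] for k in range(len(cm)))
    total = sum(sum(row) for row in cm)
    return correct / total


for k, (p, r, f, s) in enumerate(per_class_metrics(CM)):
    print(f"class {k}: precision={p:.6f} recall={r:.6f} f1={f:.6f} support={s}")
print(f"accuracy={accuracy(CM):.6f}")
```

The off-diagonal counts show where the remaining errors concentrate. Classes 1 and 2 are confused with each other (50 and 48 rows), and 46 rows labeled 5 are predicted as 0. These account for the lower recall of class 5 and the lower precision of class 0.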

Learning curves

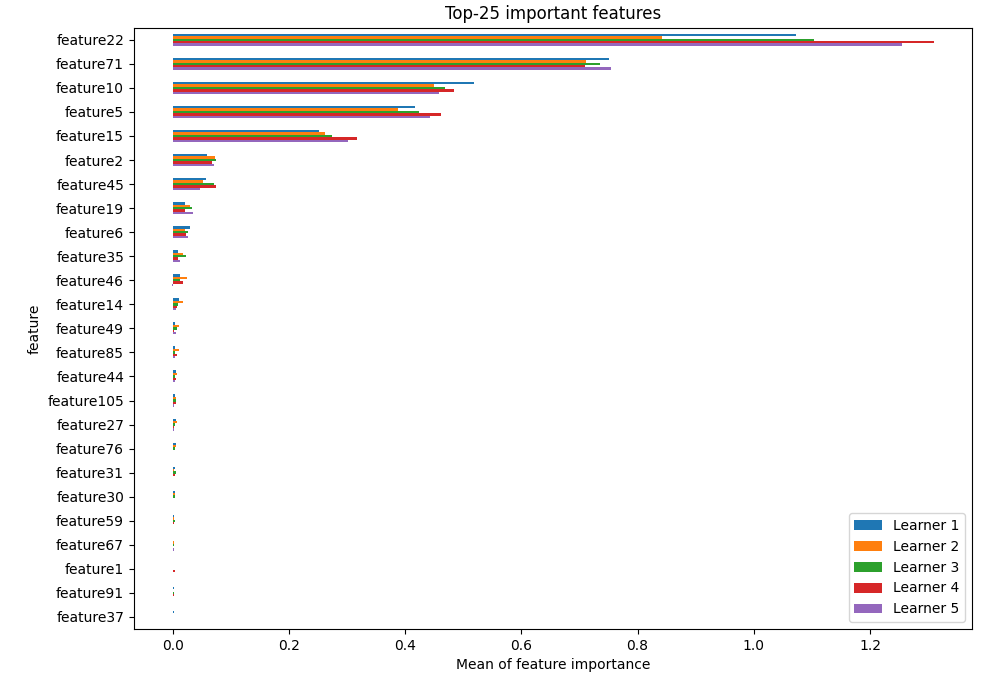

Permutation-based Importance

Confusion Matrix

Normalized Confusion Matrix

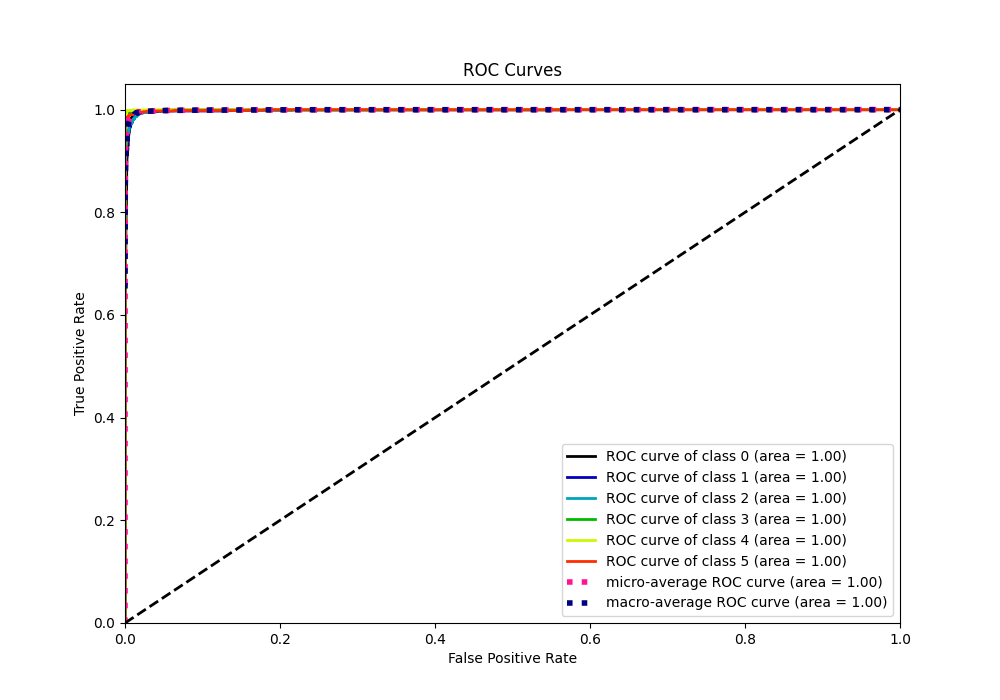

ROC Curve

Precision Recall Curve