Summary of 12_Xgboost

Extreme Gradient Boosting (XGBoost)

- n_jobs: 6

- objective: multi:softprob

- eta: 0.075

- max_depth: 7

- min_child_weight: 1

- subsample: 1.0

- colsample_bytree: 1.0

- eval_metric: f1

- num_class: 6

- explain_level: 1
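As a rough illustration, the settings above could be collected into a native XGBoost-style parameter dictionary. This is a sketch, not the report tool's own code: the name `xgb_params` is hypothetical, and mapping `n_jobs` to `nthread` is an assumption. `explain_level` is left out because it appears to be a reporting option rather than a booster parameter, and `eval_metric: f1` is left out because `f1` is assumed here to be computed by the tool rather than passed to XGBoost as a built-in metric name. The probability vector is invented purely to show what a `multi:softprob` output row looks like.

```python
# Values copied from the list above; the key mapping is an assumption.
xgb_params = {
    "objective": "multi:softprob",  # one probability per class for each row
    "eta": 0.075,                   # learning rate
    "max_depth": 7,
    "min_child_weight": 1,
    "subsample": 1.0,
    "colsample_bytree": 1.0,
    "num_class": 6,
    "nthread": 6,                   # assumed counterpart of n_jobs
}

# Hypothetical softprob output for a single row (not taken from the report).
probs = [0.02, 0.01, 0.03, 0.90, 0.03, 0.01]
assert len(probs) == xgb_params["num_class"]

# The predicted label is the index of the largest probability.
predicted = max(range(len(probs)), key=probs.__getitem__)
print("predicted class:", predicted)
```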

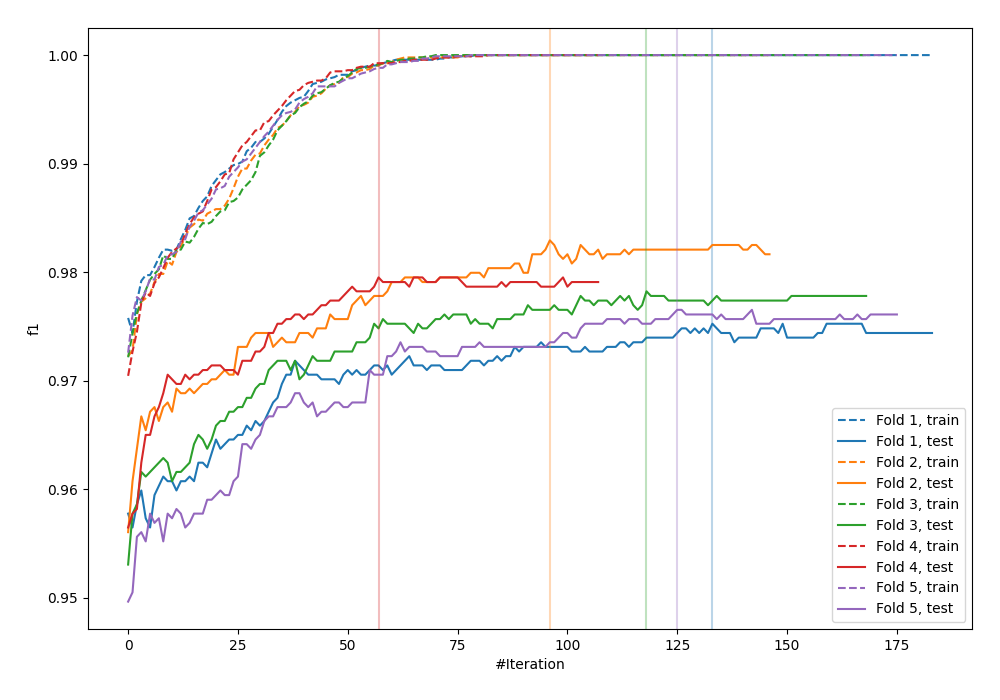

Validation

- validation_type: kfold

- k_folds: 5

- shuffle: True

- stratify: True

- random_seed: 42
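To make the validation settings concrete, the following is a minimal pure-Python sketch of shuffled, stratified 5-fold splitting. It is an illustration only: the function `stratified_kfold` is hypothetical, it will not reproduce the exact folds used for this report even with the same seed, and in practice a library routine such as scikit-learn's `StratifiedKFold` would normally be used. The labels are synthesized from the per-class supports reported under Metric details.

```python
import random
from collections import defaultdict


def stratified_kfold(labels, k=5, seed=42):
    """Yield (train_idx, valid_idx) pairs that keep class proportions per fold."""
    rng = random.Random(seed)

    # Group row indices by class label.
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    # Shuffle within each class, then deal indices round-robin into k folds.
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        for pos, idx in enumerate(indices):
            folds[pos % k].append(idx)

    # Each fold serves once as the validation set.
    for i in range(k):
        valid = sorted(folds[i])
        train = sorted(idx for j in range(k) if j != i for idx in folds[j])
        yield train, valid


# Synthetic labels built from the class supports in this report.
supports = [2462, 1562, 1960, 1944, 1829, 1963]
labels = [c for c, count in enumerate(supports) for _ in range(count)]

splits = list(stratified_kfold(labels, k=5, seed=42))
for fold, (train, valid) in enumerate(splits):
    print(f"fold {fold}: train={len(train)} valid={len(valid)}")
```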

Optimized metric

f1

Training time

40.4 seconds

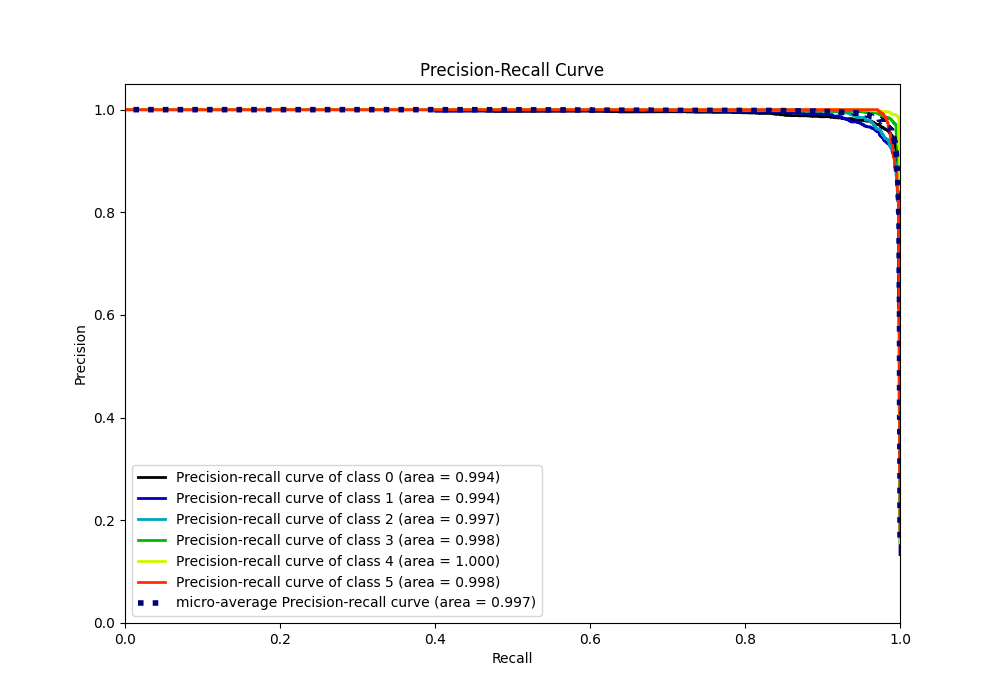

Metric details

| | 0 | 1 | 2 | 3 | 4 | 5 | accuracy | macro avg | weighted avg | logloss |
|---|---|---|---|---|---|---|---|---|---|---|
| precision | 0.96073 | 0.965451 | 0.971867 | 0.984103 | 0.993959 | 0.998953 | 0.978498 | 0.979177 | 0.978686 | 0.0713888 |
| recall | 0.983753 | 0.966069 | 0.969388 | 0.98714 | 0.989612 | 0.971982 | 0.978498 | 0.977991 | 0.978498 | 0.0713888 |
| f1-score | 0.972105 | 0.96576 | 0.970626 | 0.985619 | 0.991781 | 0.985283 | 0.978498 | 0.978529 | 0.978531 | 0.0713888 |
| support | 2462 | 1562 | 1960 | 1944 | 1829 | 1963 | 0.978498 | 11720 | 11720 | 0.0713888 |
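The `macro avg` and `weighted avg` columns can be checked from the per-class values in the table above. The short sketch below does this for the f1-score row: the macro average is the plain mean over classes, and the weighted average weights each class by its support. Variable names are illustrative.

```python
# Per-class f1-scores and supports copied from the table above.
f1_per_class = [0.972105, 0.96576, 0.970626, 0.985619, 0.991781, 0.985283]
support = [2462, 1562, 1960, 1944, 1829, 1963]

# Macro average: unweighted mean over the six classes.
macro_f1 = sum(f1_per_class) / len(f1_per_class)

# Weighted average: each class contributes in proportion to its support.
weighted_f1 = sum(f * n for f, n in zip(f1_per_class, support)) / sum(support)

print(f"macro avg f1:    {macro_f1:.6f}")
print(f"weighted avg f1: {weighted_f1:.6f}")
```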

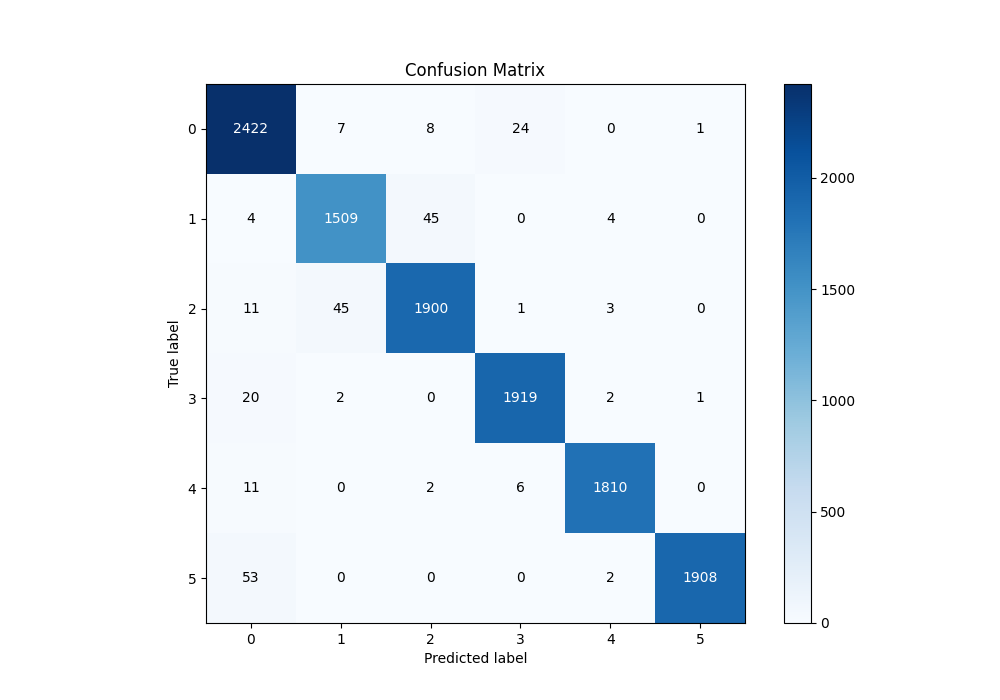

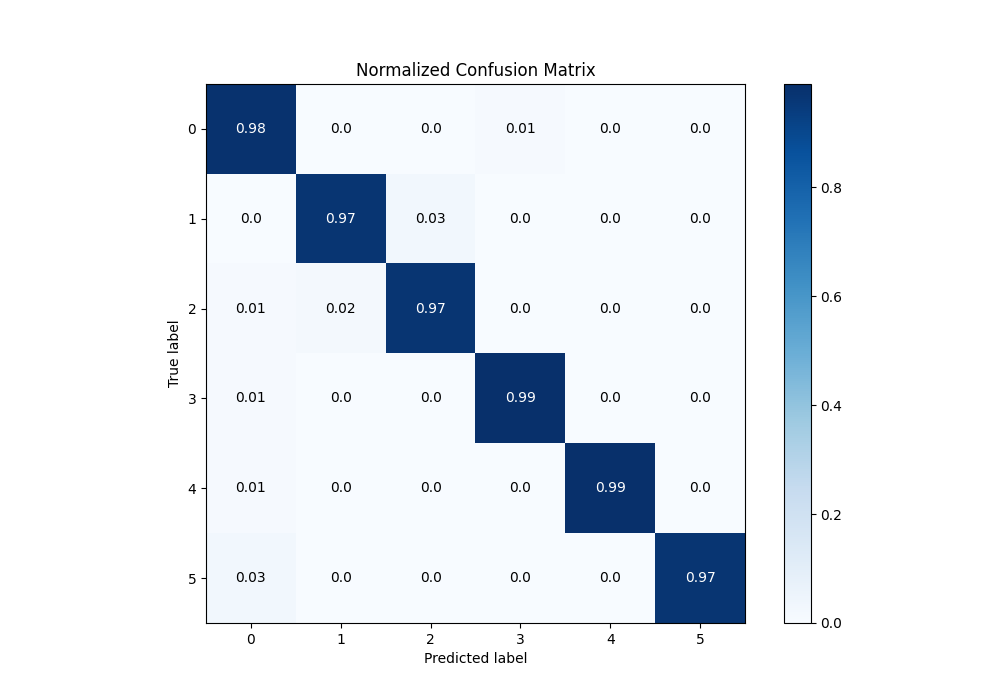

Confusion matrix

| | Predicted as 0 | Predicted as 1 | Predicted as 2 | Predicted as 3 | Predicted as 4 | Predicted as 5 |
|---|---|---|---|---|---|---|
| Labeled as 0 | 2422 | 7 | 8 | 24 | 0 | 1 |
| Labeled as 1 | 4 | 1509 | 45 | 0 | 4 | 0 |
| Labeled as 2 | 11 | 45 | 1900 | 1 | 3 | 0 |
| Labeled as 3 | 20 | 2 | 0 | 1919 | 2 | 1 |
| Labeled as 4 | 11 | 0 | 2 | 6 | 1810 | 0 |
| Labeled as 5 | 53 | 0 | 0 | 0 | 2 | 1908 |
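The per-class figures under Metric details follow directly from this matrix. As a small sketch (names are illustrative): precision for class k divides the diagonal entry by its column sum, recall divides it by its row sum, and accuracy is the diagonal total over all samples.

```python
# Confusion matrix copied from the table above: rows = labeled, columns = predicted.
cm = [
    [2422, 7, 8, 24, 0, 1],
    [4, 1509, 45, 0, 4, 0],
    [11, 45, 1900, 1, 3, 0],
    [20, 2, 0, 1919, 2, 1],
    [11, 0, 2, 6, 1810, 0],
    [53, 0, 0, 0, 2, 1908],
]

n = len(cm)
total = sum(sum(row) for row in cm)
accuracy = sum(cm[k][k] for k in range(n)) / total

metrics = {}
for k in range(n):
    tp = cm[k][k]
    predicted_k = sum(cm[i][k] for i in range(n))  # column sum
    labeled_k = sum(cm[k])                         # row sum (support)
    precision = tp / predicted_k
    recall = tp / labeled_k
    f1 = 2 * precision * recall / (precision + recall)
    metrics[k] = (precision, recall, f1)
    print(f"class {k}: precision={precision:.6f} recall={recall:.6f} f1={f1:.6f}")

print(f"accuracy: {accuracy:.6f}")
```

Read this way, the largest single off-diagonal entry is the 53 samples labeled 5 but predicted as 0, which is what pulls recall for class 5 down to about 0.972 while its precision stays near 0.999.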

Learning curves

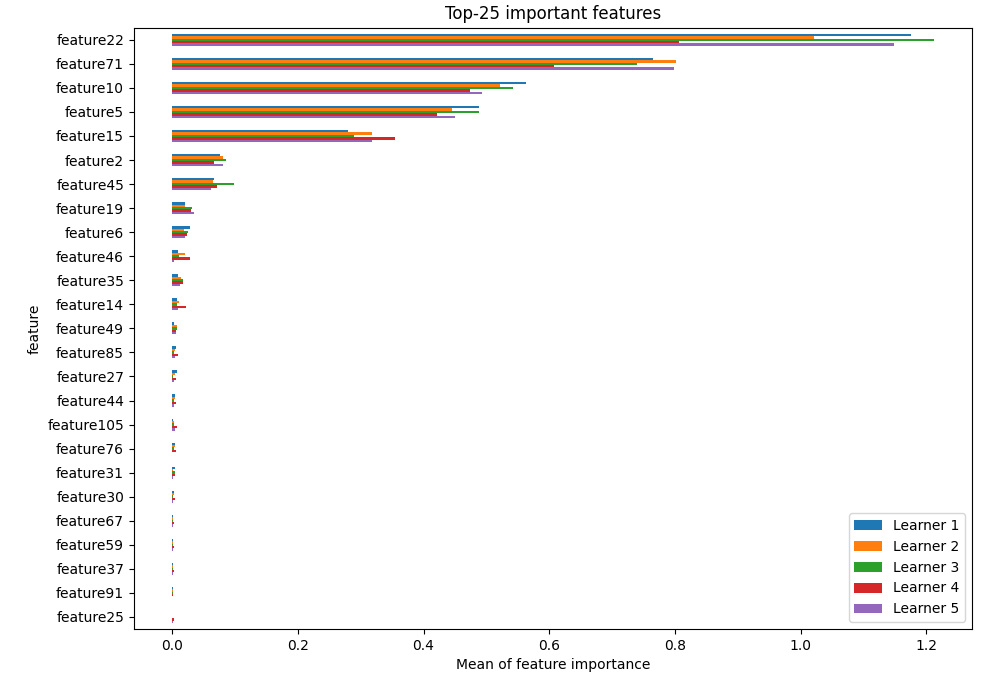

Permutation-based Importance

Confusion Matrix

Normalized Confusion Matrix

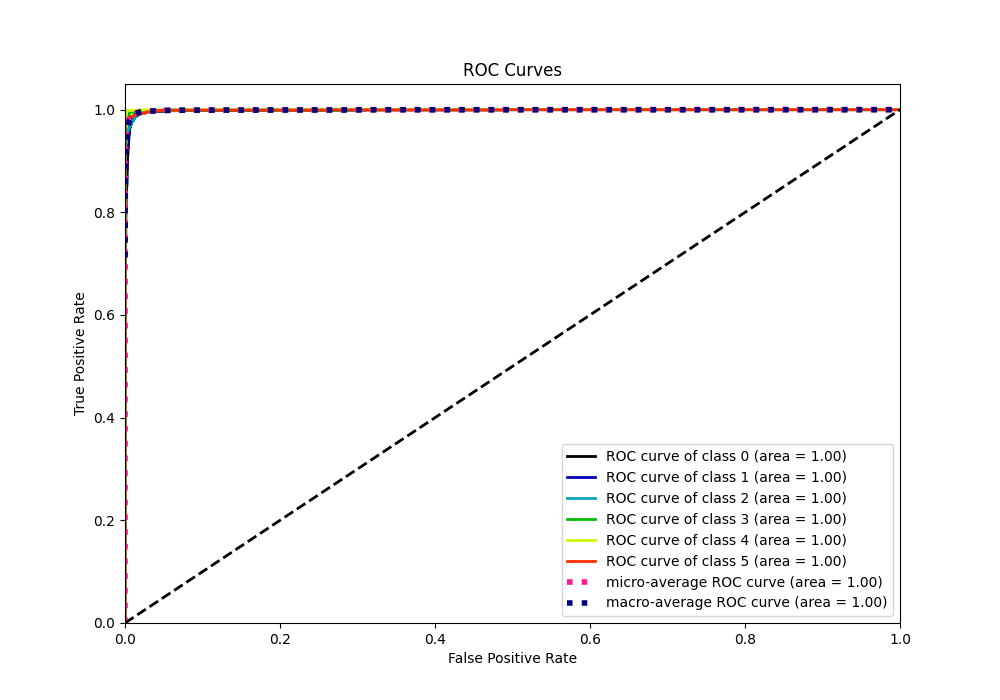

ROC Curve

Precision Recall Curve