Summary of 11_Xgboost

Extreme Gradient Boosting (Xgboost)

- n_jobs: 6

- objective: multi:softprob

- eta: 0.075

- max_depth: 5

- min_child_weight: 1

- subsample: 1.0

- colsample_bytree: 1.0

- eval_metric: f1

- num_class: 6

- explain_level: 1
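With `objective: multi:softprob` and `num_class: 6`, XGBoost returns one probability per class for each row, not a single predicted label as `multi:softmax` does. Those probabilities come from a softmax over six raw margins. The `logloss` column in the metric details is the multi-class log loss over those probabilities. The sketch below is a minimal pure-Python illustration of both steps on made-up margins. It does not call XGBoost, and the helper names `softprob` and `multiclass_logloss` are hypothetical.

```python
import math

def softprob(margins):
    """Turn one row of raw per-class margins into class probabilities (softmax)."""
    m = max(margins)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in margins]
    total = sum(exps)
    return [e / total for e in exps]

def multiclass_logloss(y_true, prob_rows, eps=1e-15):
    """Mean negative log-probability assigned to the true class of each row."""
    total = 0.0
    for label, probs in zip(y_true, prob_rows):
        p = min(max(probs[label], eps), 1 - eps)  # clip to avoid log(0)
        total += -math.log(p)
    return total / len(y_true)

# Hypothetical margins for two rows with num_class = 6 (illustrative only).
margins = [
    [4.0, 0.5, 0.2, 0.1, 0.0, 0.3],
    [0.1, 3.0, 2.5, 0.0, 0.2, 0.0],
]
probs = [softprob(row) for row in margins]
# Argmax per row: the single label that multi:softmax would return instead.
predicted = [max(range(len(p)), key=p.__getitem__) for p in probs]
loss = multiclass_logloss([0, 2], probs)  # second row's true class is 2
print(predicted, round(loss, 4))
```

The second row is deliberately misclassified (true class 2, predicted 1), so it contributes most of the loss. Keeping the full probability vector is what allows log loss, ROC and precision-recall curves to be computed afterwards.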

Validation

- validation_type: kfold

- k_folds: 5

- shuffle: True

- stratify: True

- random_seed: 42
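These validation settings describe a shuffled, stratified 5-fold split seeded with 42. Each fold holds out about one fifth of the rows and keeps the class proportions close to those of the full data. The following pure-Python sketch shows the idea. `stratified_kfold` is a hypothetical helper, and its fold assignment will not match the one the AutoML tool actually produced. scikit-learn's `StratifiedKFold(n_splits=5, shuffle=True, random_state=42)` is the usual library equivalent.

```python
import random
from collections import defaultdict

def stratified_kfold(labels, k=5, shuffle=True, seed=42):
    """Return k lists of test indices; each class is dealt round-robin across folds."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    offset = 0
    for label in sorted(by_class):
        idxs = by_class[label]
        if shuffle:
            rng.shuffle(idxs)  # shuffle within a class before dealing
        for j, idx in enumerate(idxs):
            folds[(j + offset) % k].append(idx)
        offset += len(idxs)  # continue dealing where the previous class stopped
    return [sorted(f) for f in folds]

# Toy labels with a larger class 0 (illustrative only).
labels = [0] * 10 + [1] * 5 + [2] * 5
folds = stratified_kfold(labels)
for i, test_idx in enumerate(folds):
    test_set = set(test_idx)
    train_idx = [j for j in range(len(labels)) if j not in test_set]
    print(i, len(train_idx), len(test_idx))
```

Every row lands in exactly one test fold. The reported metrics are therefore computed on out-of-fold predictions covering all 11720 rows, which matches the `support` total in the metric details.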

Optimized metric

f1

Training time

43.8 seconds

Metric details

| | 0 | 1 | 2 | 3 | 4 | 5 | accuracy | macro avg | weighted avg | logloss |
|---|---|---|---|---|---|---|---|---|---|---|
| precision | 0.970847 | 0.965429 | 0.973873 | 0.985663 | 0.991266 | 0.998435 | 0.980802 | 0.980919 | 0.980896 | 0.0583742 |
| recall | 0.987409 | 0.965429 | 0.969898 | 0.990226 | 0.992892 | 0.975038 | 0.980802 | 0.980149 | 0.980802 | 0.0583742 |
| f1-score | 0.979058 | 0.965429 | 0.971881 | 0.987939 | 0.992079 | 0.986598 | 0.980802 | 0.980497 | 0.980809 | 0.0583742 |
| support | 2462 | 1562 | 1960 | 1944 | 1829 | 1963 | 0.980802 | 11720 | 11720 | 0.0583742 |

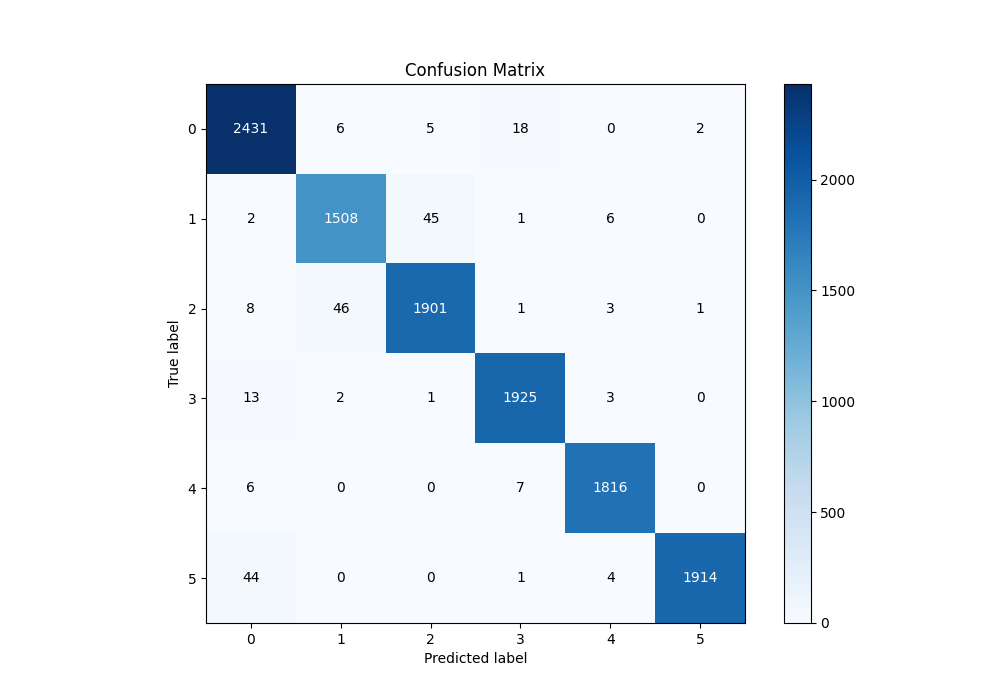

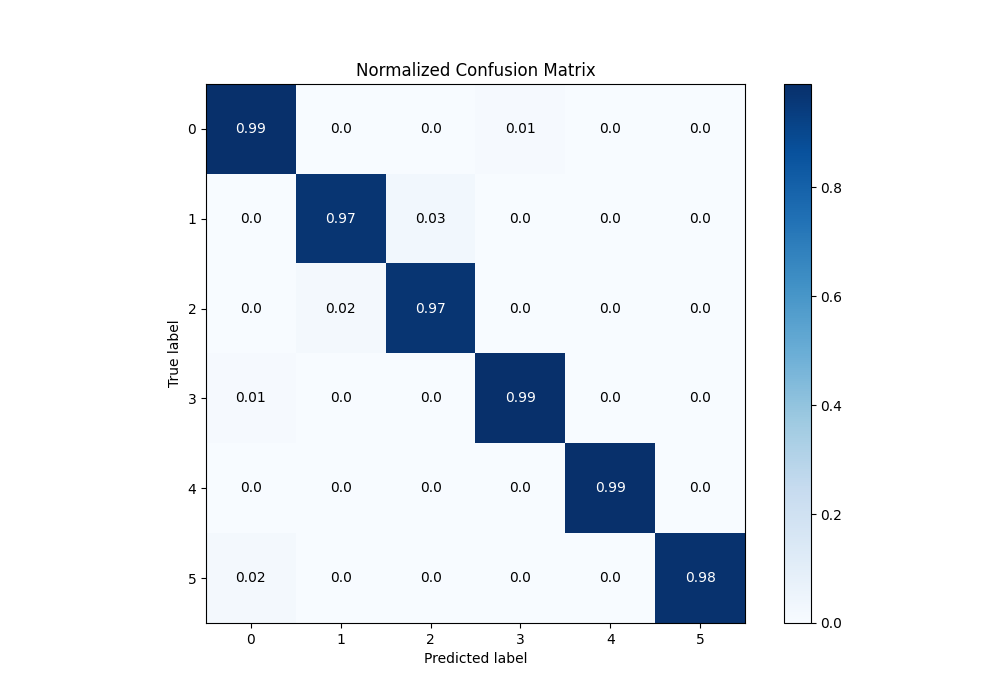

Confusion matrix

| | Predicted as 0 | Predicted as 1 | Predicted as 2 | Predicted as 3 | Predicted as 4 | Predicted as 5 |
|---|---|---|---|---|---|---|
| Labeled as 0 | 2431 | 6 | 5 | 18 | 0 | 2 |
| Labeled as 1 | 2 | 1508 | 45 | 1 | 6 | 0 |
| Labeled as 2 | 8 | 46 | 1901 | 1 | 3 | 1 |
| Labeled as 3 | 13 | 2 | 1 | 1925 | 3 | 0 |
| Labeled as 4 | 6 | 0 | 0 | 7 | 1816 | 0 |
| Labeled as 5 | 44 | 0 | 0 | 1 | 4 | 1914 |
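The per-class precision, recall and F1 in the metric details follow directly from this confusion matrix. Precision for class j is the diagonal count divided by the "Predicted as j" column sum. Recall is the diagonal count divided by the "Labeled as j" row sum. F1 is the harmonic mean of the two. The sketch below recomputes these, plus accuracy and the macro and weighted F1 averages, from the counts in the table. The function name `report` is hypothetical, and only plain Python is used.

```python
# Rows: "Labeled as i"; columns: "Predicted as j" (counts copied from the confusion matrix).
CM = [
    [2431, 6, 5, 18, 0, 2],
    [2, 1508, 45, 1, 6, 0],
    [8, 46, 1901, 1, 3, 1],
    [13, 2, 1, 1925, 3, 0],
    [6, 0, 0, 7, 1816, 0],
    [44, 0, 0, 1, 4, 1914],
]

def report(cm):
    n = len(cm)
    support = [sum(row) for row in cm]                               # true count per class
    predicted = [sum(cm[i][j] for i in range(n)) for j in range(n)]  # column sums
    total = sum(support)
    precision, recall, f1 = [], [], []
    for c in range(n):
        tp = cm[c][c]
        p = tp / predicted[c] if predicted[c] else 0.0
        r = tp / support[c] if support[c] else 0.0
        precision.append(p)
        recall.append(r)
        f1.append(2 * p * r / (p + r) if p + r else 0.0)
    accuracy = sum(cm[c][c] for c in range(n)) / total
    macro_f1 = sum(f1) / n                                           # unweighted mean over classes
    weighted_f1 = sum(f * s for f, s in zip(f1, support)) / total    # weighted by support
    return precision, recall, f1, accuracy, macro_f1, weighted_f1

precision, recall, f1, accuracy, macro_f1, weighted_f1 = report(CM)
print(f"accuracy={accuracy:.6f} macro_f1={macro_f1:.6f} weighted_f1={weighted_f1:.6f}")
```

Rounded to six decimals, these values should agree with the `accuracy`, `macro avg` and `weighted avg` entries of the metric details. The largest off-diagonal counts (class 1 vs. class 2, and class 5 predicted as class 0) explain the lowest per-class F1 scores. The report does not state which F1 average the `f1` optimization target uses.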

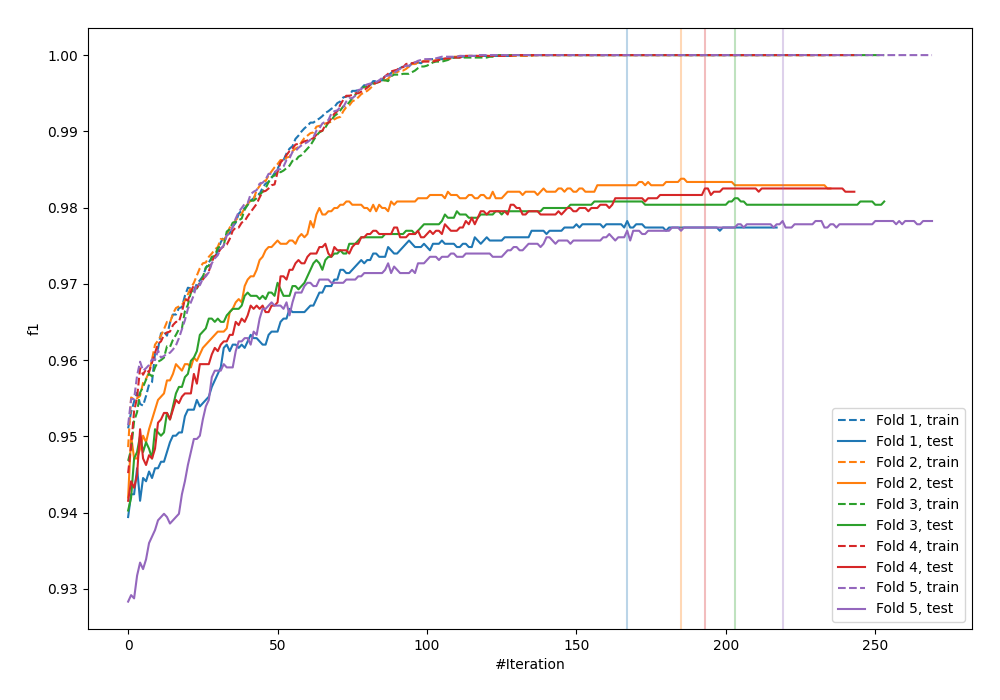

Learning curves

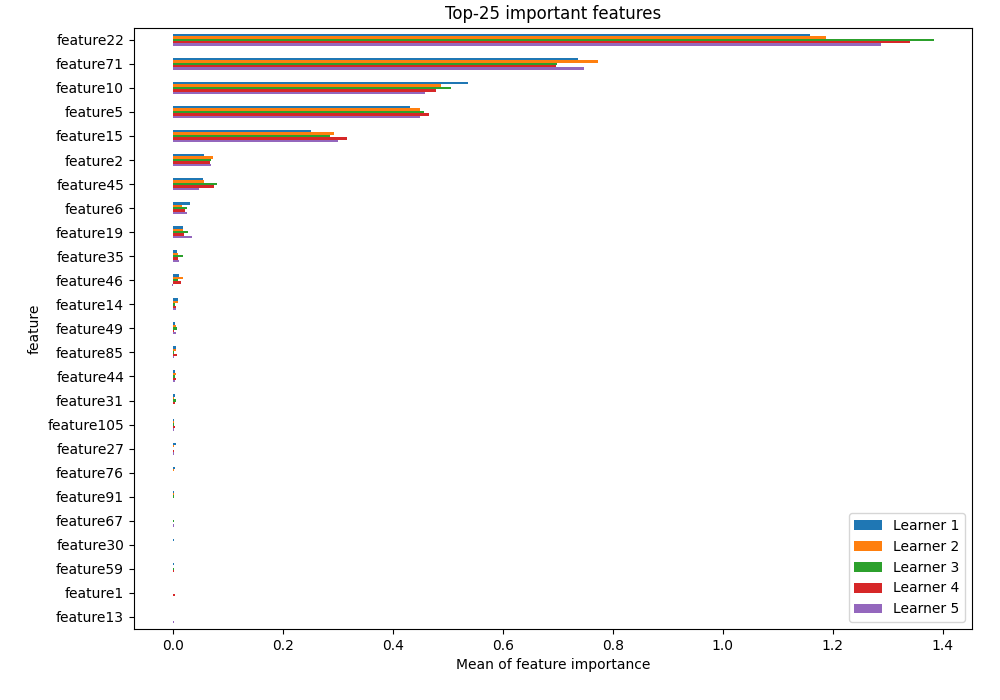

Permutation-based Importance

Confusion Matrix

Normalized Confusion Matrix

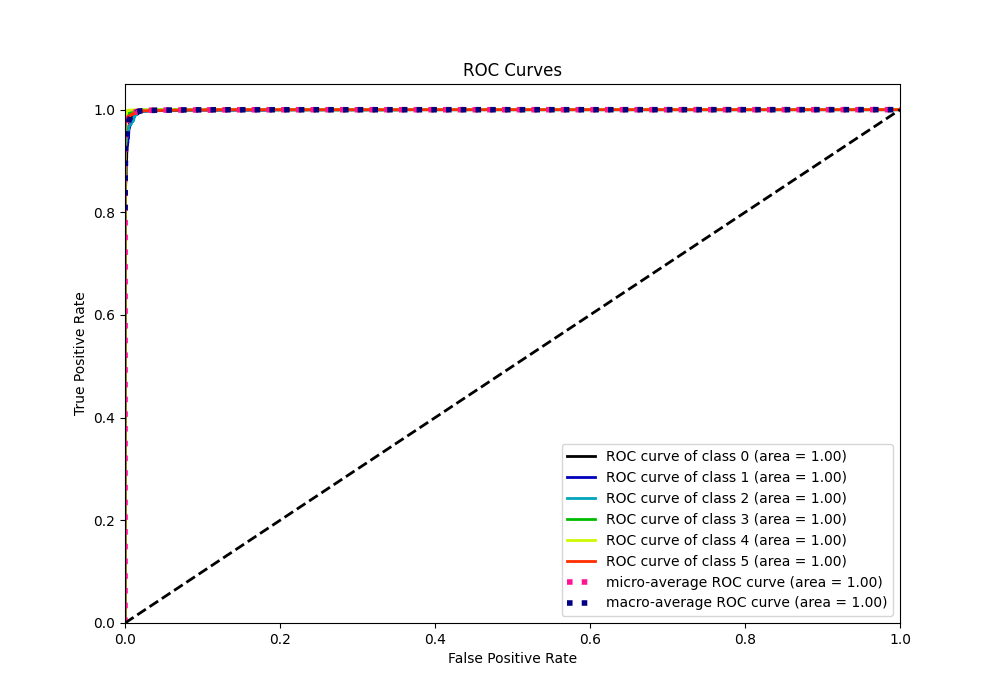

ROC Curve

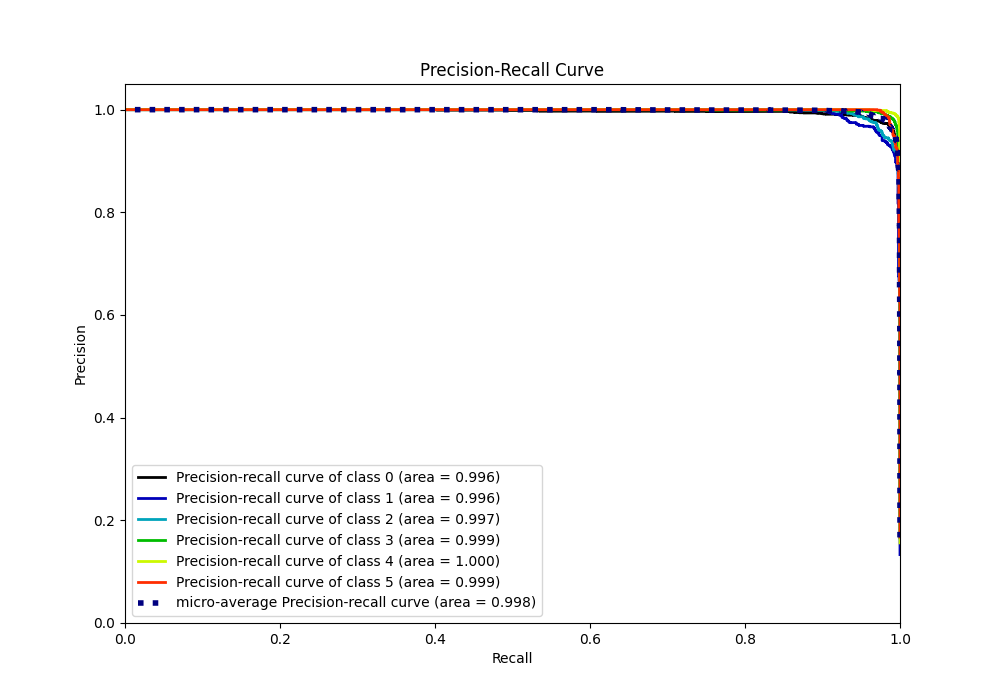

Precision Recall Curve