Summary of 10_Xgboost_SelectedFeatures

Extreme Gradient Boosting (Xgboost)

- n_jobs: 6

- objective: multi:softprob

- eta: 0.075

- max_depth: 7

- min_child_weight: 1

- subsample: 1.0

- colsample_bytree: 1.0

- eval_metric: f1

- num_class: 6

- explain_level: 1
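The list above combines XGBoost booster parameters with settings that belong to the AutoML wrapper that produced this report. The sketch below splits them apart, assuming the booster is trained through XGBoost's native parameter dictionary. It treats `explain_level` as a wrapper-only setting. It also sets aside `eval_metric: f1`, because as far as we know F1 is not one of XGBoost's built-in evaluation metrics, so the wrapper presumably computes it itself. Finally, it renames `n_jobs` to the native `nthread`. The helper `split_params` and the `WRAPPER_ONLY` / `RENAMES` tables are hypothetical.

```python
# Hyperparameters as listed in the report.
REPORT_PARAMS = {
    "n_jobs": 6,
    "objective": "multi:softprob",
    "eta": 0.075,
    "max_depth": 7,
    "min_child_weight": 1,
    "subsample": 1.0,
    "colsample_bytree": 1.0,
    "eval_metric": "f1",
    "num_class": 6,
    "explain_level": 1,
}

# Assumption: these keys are consumed by the AutoML wrapper, not by XGBoost.
WRAPPER_ONLY = {"explain_level", "eval_metric"}

# Assumption: the wrapper's n_jobs corresponds to XGBoost's native nthread.
RENAMES = {"n_jobs": "nthread"}


def split_params(params):
    """Hypothetical helper: return (booster_params, wrapper_params)."""
    booster, wrapper = {}, {}
    for key, value in params.items():
        if key in WRAPPER_ONLY:
            wrapper[key] = value
        else:
            booster[RENAMES.get(key, key)] = value
    return booster, wrapper


booster_params, wrapper_params = split_params(REPORT_PARAMS)
print(booster_params)
print(wrapper_params)
```

`booster_params` could then be passed as the `params` argument of `xgboost.train`. The report does not give the number of boosting rounds or any early-stopping setting, so they are left out here.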

Validation

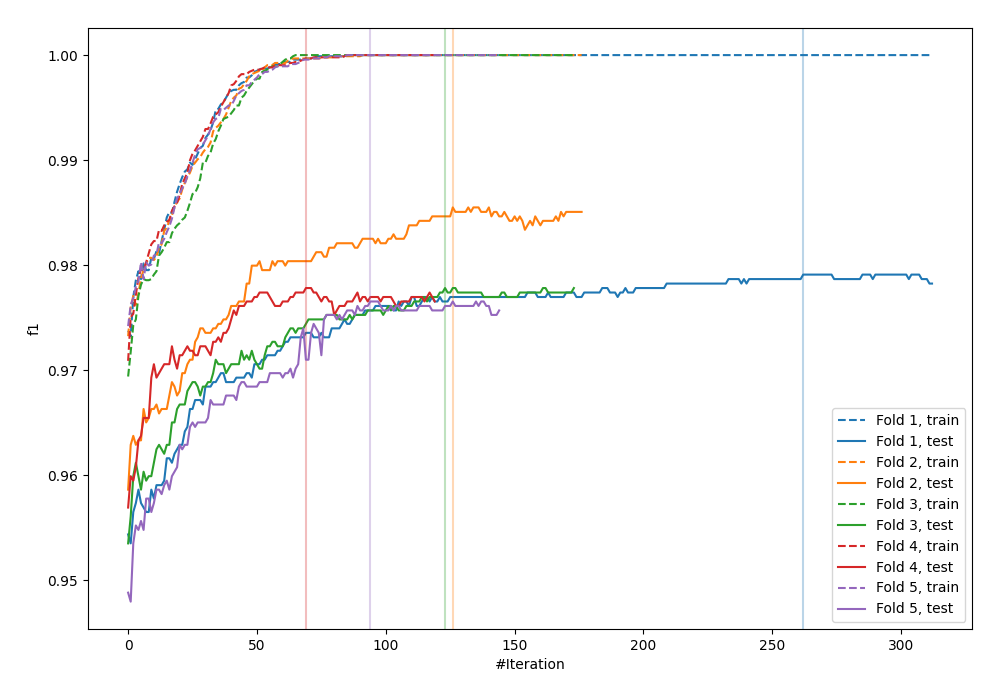

- validation_type: kfold

- k_folds: 5

- shuffle: True

- stratify: True

- random_seed: 42
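With `stratify: True`, each of the 5 folds keeps roughly the same class mix as the full dataset, and `shuffle: True` with `random_seed: 42` randomizes which rows land in which fold reproducibly. The pure-Python sketch below illustrates the idea. It is not the splitter the AutoML tool actually uses (if it relies on scikit-learn's `StratifiedKFold`, fold membership will differ even with the same seed). The function name `stratified_kfold` is hypothetical. The example labels are rebuilt from the per-class `support` counts under Metric details, assuming those are the out-of-fold class totals.

```python
import random
from collections import defaultdict


def stratified_kfold(labels, k=5, shuffle=True, seed=42):
    """Return k (train_indices, valid_indices) pairs with per-class balance."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)

    folds = [[] for _ in range(k)]
    position = 0  # running counter so fold sizes stay balanced across classes
    for y in sorted(by_class):
        indices = by_class[y]
        if shuffle:
            rng.shuffle(indices)
        # Deal this class's rows round-robin over the folds.
        for i in indices:
            folds[position % k].append(i)
            position += 1

    splits = []
    for fold in folds:
        valid = set(fold)
        train = [i for i in range(len(labels)) if i not in valid]
        splits.append((train, sorted(valid)))
    return splits


# Class counts taken from the "support" row of the metric table.
support = {0: 2462, 1: 1562, 2: 1960, 3: 1944, 4: 1829, 5: 1963}
labels = [c for c, n in support.items() for _ in range(n)]
splits = stratified_kfold(labels, k=5, shuffle=True, seed=42)
for train, valid in splits:
    print(len(train), len(valid))
```

Every row is used for validation exactly once, and each class is spread across the folds with at most one row of difference between folds.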

Optimized metric

f1

Training time

39.5 seconds

Metric details

| 0 | 1 | 2 | 3 | 4 | 5 | accuracy | macro avg | weighted avg | logloss | |

|---|---|---|---|---|---|---|---|---|---|---|

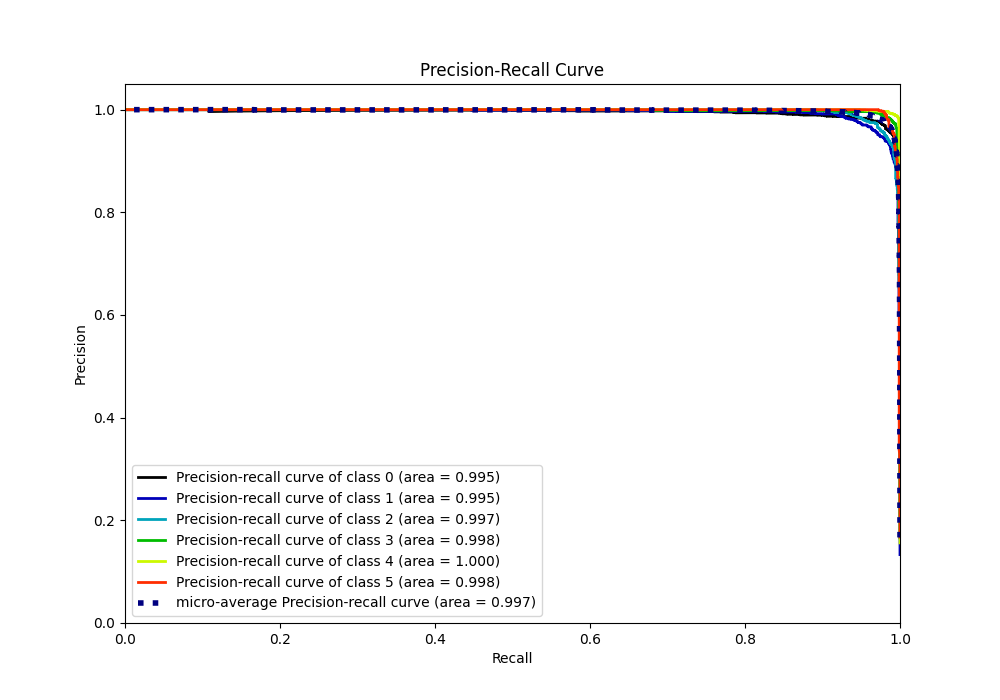

| precision | 0.966521 | 0.964126 | 0.973846 | 0.983632 | 0.99179 | 0.997914 | 0.979352 | 0.979638 | 0.979466 | 0.0662893 |

| recall | 0.984972 | 0.963508 | 0.968878 | 0.989198 | 0.990705 | 0.975038 | 0.979352 | 0.978716 | 0.979352 | 0.0662893 |

| f1-score | 0.975659 | 0.963817 | 0.971355 | 0.986407 | 0.991247 | 0.986344 | 0.979352 | 0.979138 | 0.979366 | 0.0662893 |

| support | 2462 | 1562 | 1960 | 1944 | 1829 | 1963 | 0.979352 | 11720 | 11720 | 0.0662893 |
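The `macro avg` column is the unweighted mean of the six per-class scores. The `weighted avg` column weights each class by its `support`. The short check below recomputes both F1 averages from the rounded per-class values in the table.

```python
# Per-class F1 scores and supports copied from the table (classes 0-5).
f1 = [0.975659, 0.963817, 0.971355, 0.986407, 0.991247, 0.986344]
support = [2462, 1562, 1960, 1944, 1829, 1963]

# Macro average: every class counts equally.
macro_f1 = sum(f1) / len(f1)

# Weighted average: each class counts in proportion to its number of rows.
weighted_f1 = sum(s * n for s, n in zip(f1, support)) / sum(support)

print(f"macro F1    = {macro_f1:.6f}")
print(f"weighted F1 = {weighted_f1:.6f}")
```

Both results match the table (0.979138 and 0.979366). The classes are fairly balanced here, so the two averages differ only in the fourth decimal place.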

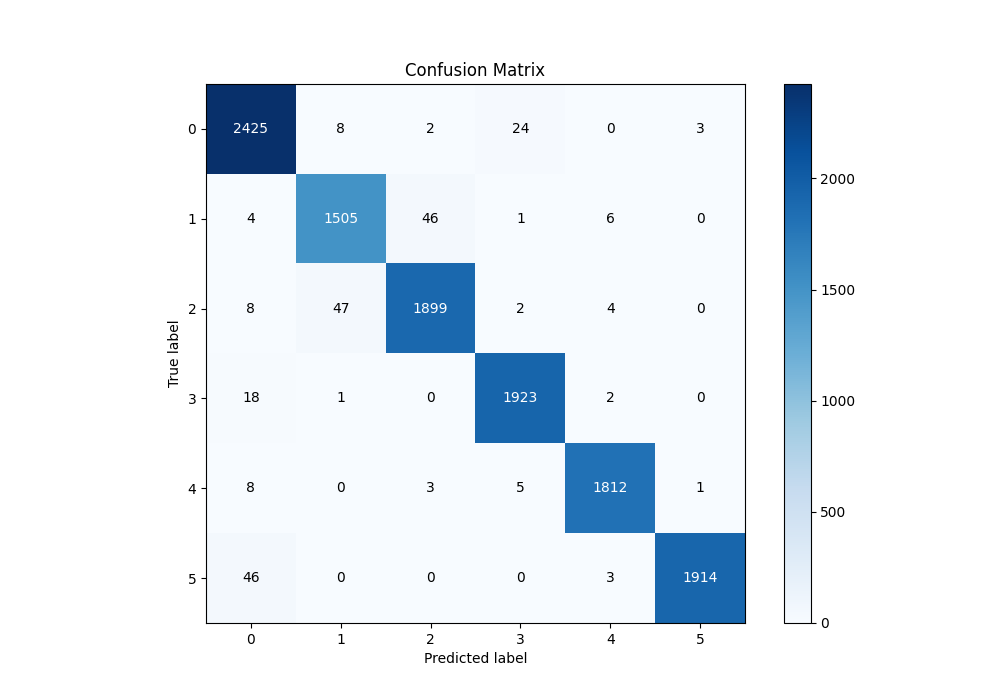

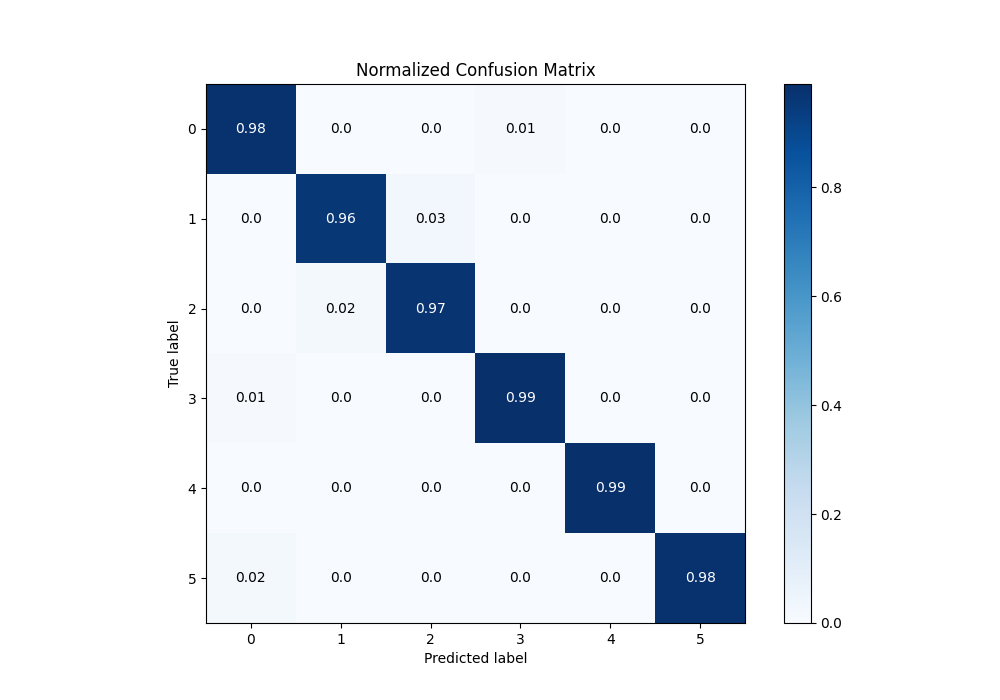

Confusion matrix

|  | Predicted as 0 | Predicted as 1 | Predicted as 2 | Predicted as 3 | Predicted as 4 | Predicted as 5 |
|---|---|---|---|---|---|---|
| Labeled as 0 | 2425 | 8 | 2 | 24 | 0 | 3 |
| Labeled as 1 | 4 | 1505 | 46 | 1 | 6 | 0 |
| Labeled as 2 | 8 | 47 | 1899 | 2 | 4 | 0 |
| Labeled as 3 | 18 | 1 | 0 | 1923 | 2 | 0 |
| Labeled as 4 | 8 | 0 | 3 | 5 | 1812 | 1 |
| Labeled as 5 | 46 | 0 | 0 | 0 | 3 | 1914 |
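Rows are true labels and columns are predictions. Per-class recall therefore divides the diagonal count by the row total, and precision divides it by the column total. The sketch below recomputes the per-class scores and overall accuracy from the counts above. For example, class 0 precision is 2425 / 2509 ≈ 0.966521 and recall is 2425 / 2462 ≈ 0.984972, matching Metric details. The helper `per_class_scores` is a hypothetical name.

```python
# Confusion matrix from the report: rows = true label, columns = predicted label.
cm = [
    [2425, 8, 2, 24, 0, 3],
    [4, 1505, 46, 1, 6, 0],
    [8, 47, 1899, 2, 4, 0],
    [18, 1, 0, 1923, 2, 0],
    [8, 0, 3, 5, 1812, 1],
    [46, 0, 0, 0, 3, 1914],
]


def per_class_scores(cm):
    """Return (precision, recall, f1) lists, one entry per class."""
    n = len(cm)
    precision, recall, f1 = [], [], []
    for c in range(n):
        tp = cm[c][c]
        predicted = sum(cm[r][c] for r in range(n))  # column total
        actual = sum(cm[c])  # row total
        p = tp / predicted if predicted else 0.0
        r = tp / actual if actual else 0.0
        precision.append(p)
        recall.append(r)
        f1.append(2 * p * r / (p + r) if p + r else 0.0)
    return precision, recall, f1


precision, recall, f1 = per_class_scores(cm)
accuracy = sum(cm[i][i] for i in range(len(cm))) / sum(map(sum, cm))

for c, (p, r, f) in enumerate(zip(precision, recall, f1)):
    print(f"class {c}: precision={p:.6f} recall={r:.6f} f1={f:.6f}")
print(f"accuracy={accuracy:.6f}")
```

The log loss in the metric table cannot be recovered this way, because it depends on the predicted probabilities rather than on the hard predictions counted here.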

Learning curves

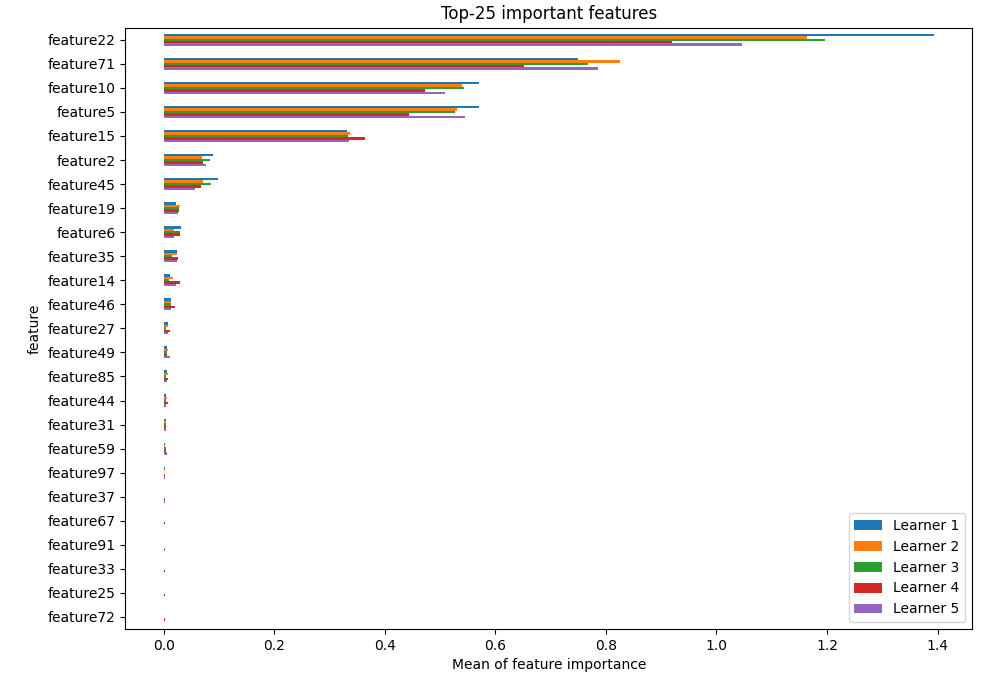

Permutation-based Importance

Confusion Matrix

Normalized Confusion Matrix

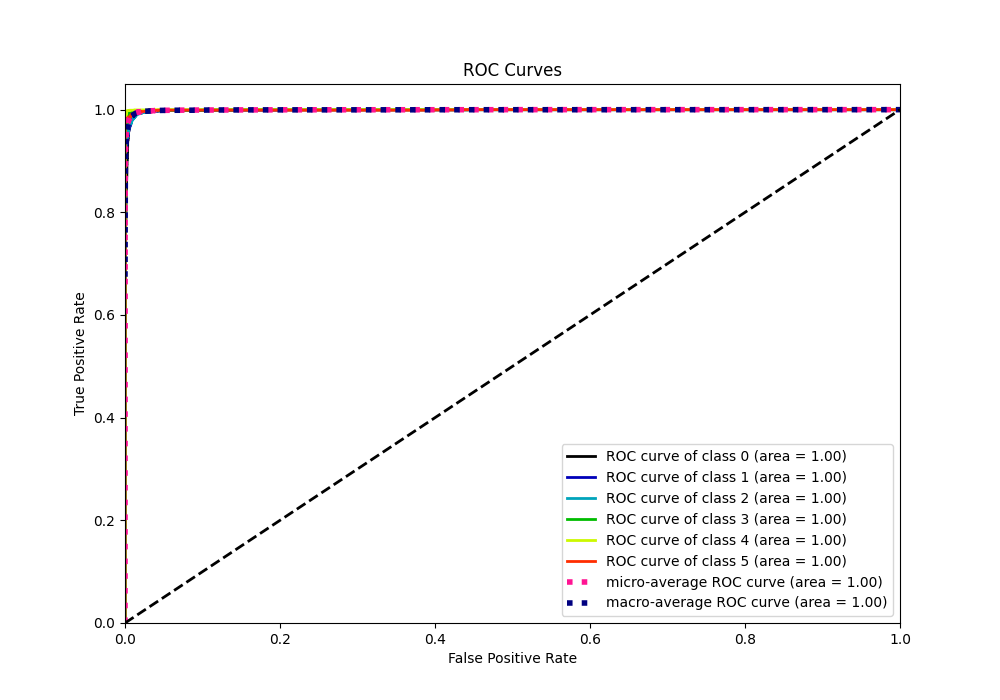
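The report does not state how the matrix is normalized. Assuming each row (true label) is divided by its total, a common convention, each row sums to 1 and the diagonal equals per-class recall. The sketch below applies that assumption to the counts from the Confusion matrix table.

```python
# Counts from the "Confusion matrix" table (rows = true label).
cm = [
    [2425, 8, 2, 24, 0, 3],
    [4, 1505, 46, 1, 6, 0],
    [8, 47, 1899, 2, 4, 0],
    [18, 1, 0, 1923, 2, 0],
    [8, 0, 3, 5, 1812, 1],
    [46, 0, 0, 0, 3, 1914],
]

# Assumption: normalize over true labels, so each row sums to 1.
normalized = [[count / sum(row) for count in row] for row in cm]

for c, row in enumerate(normalized):
    print(f"class {c}: " + " ".join(f"{v:.3f}" for v in row))
```

Under this convention, the largest off-diagonal share is class 1 predicted as class 2 (46 / 1562, about 2.9%).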

ROC Curve

Precision Recall Curve